E-E-A-T: More than an introduction to Experience, Expertise, Authority, Trust

There are many definitions and explanations of E-E-A-T, but few are truly tangible. This article aims to explain E-E-A-T more concretely and to clear up misunderstandings surrounding it. (German version: Eine Einführung in E-E-A-T: Bedeutung für Google-Ranking & SEO)

Contents

- 1 What is E-E-A-T?

- 2 What is E-E-A-T not?

- 3 E-E-A-T as a concept for brand identification and quality assurance

- 4 In which areas can Google use E-E-A-T?

- 5 Which measurable factors can influence E-E-A-T?

- 6 E-E-A-T evaluation via vector space analyses

- 7 E-E-A-T rating: A mixture of Coati (Panda), links & entity signals

- 8 The Role of E-E-A-T in the Ranking Process at Google

- 9 E-E-A-T as a meta-level ranking influence

- 10 What does YMYL mean in relation to E-E-A-T?

- 11 How important is E-E-A-T for non-YMYL topics?

- 12 What are the Quality Rater Guidelines and why are they closely linked to E-E-A-T?

- 13 Experience + Expertise = relevance of the entity

- 14 Authority = importance of the entity with regard to a topic / industry

- 15 Trust = reputation of the entity

- 16 As an author or publisher, can you also be rated well in terms of E-E-A-T in multiple areas?

- 17 Why is E-E-A-T so important for Google?

- 18 Frequently asked questions about E-E-A-T

- 19 More reading tips and videos on E-E-A-T assessment and YMYL

What is E-E-A-T?

E-E-A-T is Google’s quality concept and combines components from offpage evaluation methods (e.g. PageRank), onpage content evaluation methods (e.g. Coati, Panda) and evaluation of entities (authors and organizations). Until December 2022, E-E-A-T was still called E-A-T.

E-E-A-T stands for the four terms experience, expertise, authority and trust and serves as a guideline for search quality raters to rate search results. The E-E-A-T rating plays a particularly important role for Your-Money-Your-Life (YMYL) pages. E-A-T, the predecessor of E-E-A-T, was first mentioned in the Quality Rater Guidelines in 2014. Through the E-E-A-T concept, content is rated on the credibility, expertise and authority of the source as well as on the quality of the content itself. The E-E-A-T rating refers to the Main Content (MC), the Supplementary Content (SC) and the author, publisher or source in general.

What is Experience?

Experience stands for the first E in E-E-A-T. “Experience” means that the quality of the content is also evaluated from the point of view of the extent to which the content creator has first-hand experience with the topic.

What is Expertise?

Expertise stands for the second E in E-E-A-T. It stands for the knowledge and skills that an author or publisher possesses. Expertise refers only to the author or publisher.

What is Authority?

Authority stands for the letter A in E-E-A-T. It stands for how well known and recognized an author, the website or the content is in the respective topic area.

What is Trust?

Trust stands for the letter T in E-E-A-T. It stands for the credibility of an author, the website or the content in the respective topic area. For Google, trust is at the heart of the E-E-A-T concept.

What is E-E-A-T not?

E-E-A-T is not a ranking factor or a ranking system. E-E-A-T is a theoretical concept that summarizes various factors and signals that ultimately provide a pattern for trustworthy websites and source entities in terms of the E-E-A-T concept. Accordingly, there is no E-E-A-T score.

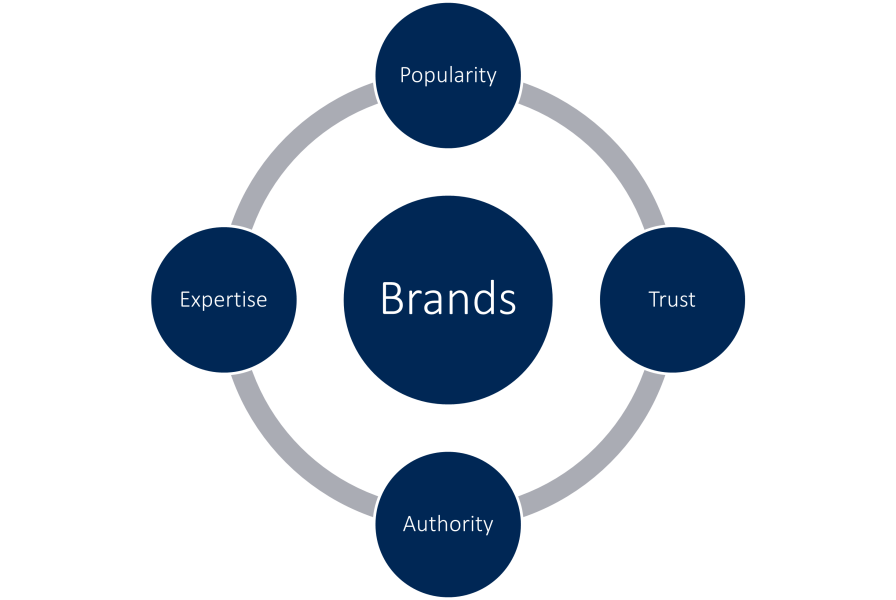

E-E-A-T as a concept for brand identification and quality assurance

E-E-A-T is Google’s own concept for identifying publishers and authors who are a brand. In this way, Google wants to improve the quality of search results and thus the user experience with the SERPs.

Google itself distinguishes between relevance and quality. Relevance always relates to the search query and refers to the document in question. (More about relevance in the article Relevance, Pertinence and Usefulness at Google.) Quality can refer to the content quality of the document or to the publisher or author. Brand concepts can only be applied to the second of these, the quality of the publisher or author.

Brand characteristics

A brand has the following essential characteristics:

- Awareness

- Trust

- Topical authority

- Topical expertise and competence

Three of these characteristics (trust, topical authority and topical expertise) are also found in E-E-A-T. Mere popularity (awareness) is not a useful attribute for determining quality, as it is disconnected from the topic. Applied to Google, this means that the search volume for a brand is not necessarily decisive for E-E-A-T.

More in the article E-A-T & TOPICAL BRAND POSITIONING AS A CRITICAL SUCCESS FACTOR IN SEO

In which areas can Google use E-E-A-T?

E-E-A-T can be used in many areas of Google to improve the quality of search results.

Possible areas for E-E-A-T can be:

- Evaluation of Content: Evaluation of individual content and documents with regard to first hand experience and expertise.

- Topical Evaluation of domains and website areas: Evaluate domains and website areas in terms of topical authority.

- Evaluation of source entities: Evaluation of organizations, people or authors with regard to topical authority and brand strength.

- Filtering for indexing: Classification and identification of sources worth indexing.

- Classification for crawling: Classification and identification of sources worth crawling.

- Identifying and classifying sources for training LLMs: Identifying seed resources for extracting training data. Research has shown that AI-generated answers in SGE (Search Generative Experience) do not correlate with the ranking results, which suggests that the classic ranking factors play little role here. E-E-A-T would be a plausible alternative basis for selecting sources.

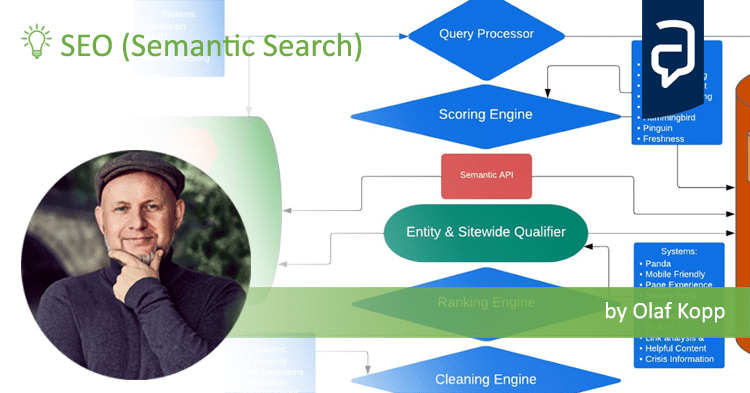

Role and influence of E-E-A-T on Google search

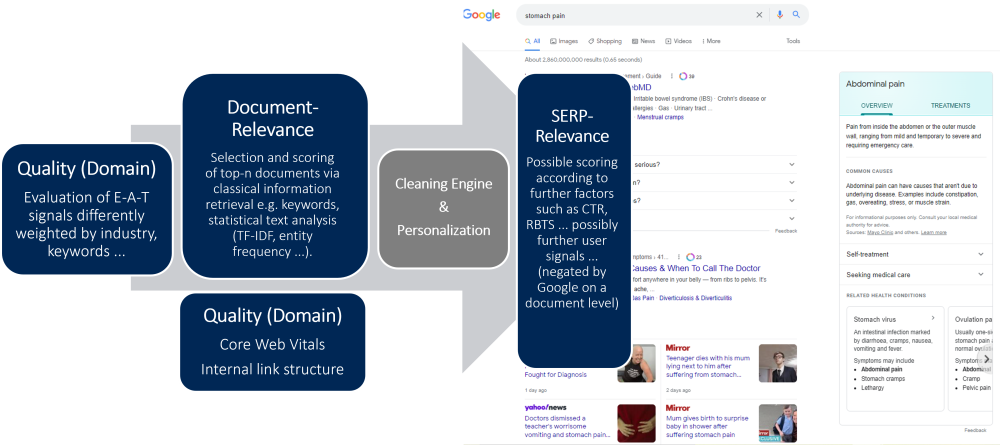

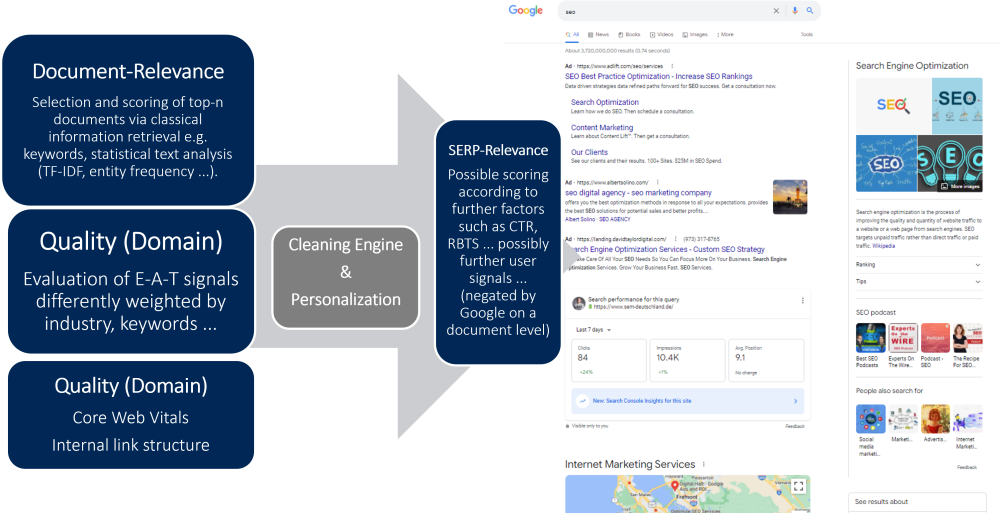

Broadly, Google's ranking can be divided into two areas.

Relevance: In ranking, relevance refers to the document in relation to the search query. Content is relevant if it matches the search intent. More on relevance in the post Relevance, Pertinence and Usefulness on Google and on search intent in the post What is Search Intent, Search Intent & how to identify it?

Quality (E-E-A-T): When Google talks about quality, it often refers to the quality of an entire domain, individual website sections or all content on a particular topic. In contrast to relevance, quality refers to a more holistic view. E-E-A-T is Google’s quality concept.

E-E-A-T can have an influence on the following Google areas:

- Rankings in classic Google search (confirmed by Google)

- Insertion of rich snippet elements

  - Reviews / stars

  - FAQ snippets

- Insertion of sitelinks

- Featured snippets, if applicable

- Display in Google Discover (confirmed by Google)

- Display in Google News (confirmed by Google)

- Indexing of content (indirectly confirmed by Google)

- Helpful Content System (confirmed by Google)

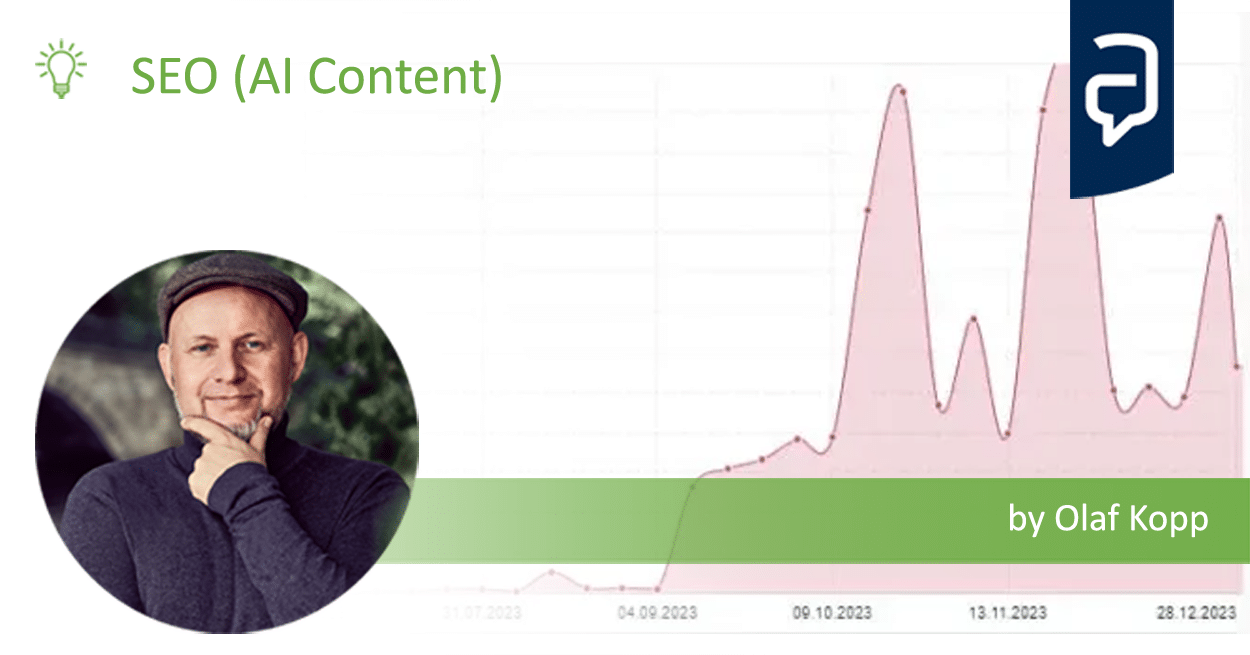

- Scalable weeding out of sources with mass-produced, low-quality AI content

- Possible future delivery of results in Snapshot AI Box based on Expertise, Authority and Trust.

- Future delivery of results in Perspectives based on expertise and experience (confirmed by Google)

- Possible delivery via Shopping Graph based on trust and authority

E-E-A-T in terms of crawling and indexing

With the rise of AI tools like ChatGPT or jasper.ai for content creation, Google faces a major challenge. Google has to use its own crawling resources efficiently, which means it does not want to crawl all content accessible online. The same applies to indexing: it makes no sense for Google to include inferior content in the search index. The more content Google indexes and has to process in the information retrieval process, the more computing power is needed.

Google performs document scoring only on the top n documents of a thematically relevant document corpus; for efficiency reasons, the rest are not ranked. Still, unneeded content costs unnecessary storage, parsing and rendering resources.

E-E-A-T can help Google evaluate content at scale on the entity level, i.e. per domain or author, without having to crawl every single piece of content. At this macro level, content can be classified according to its author entity and given more or less crawl budget. In addition, Google can exclude entire content groups from indexing this way.
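To make entity-level crawl budgeting more concrete, here is a minimal Python sketch. It assumes a hypothetical quality score between 0 and 1 per source entity and a hypothetical cut-off (`min_quality`). It distributes a fixed crawl budget in proportion to those scores and gives entities below the cut-off nothing, which excludes their whole content group at once. It illustrates the principle described above and is not Google's actual crawl scheduling.

```python
def allocate_crawl_budget(entity_quality, total_budget, min_quality=0.3):
    """Split a crawl budget across source entities in proportion to a quality score.

    entity_quality: dict mapping a source entity (author, publisher, domain)
        to a hypothetical quality score between 0 and 1.
    total_budget: number of URLs that may be fetched in total.
    min_quality: hypothetical cut-off; entities below it receive no budget.
    """
    eligible = {e: q for e, q in entity_quality.items() if q >= min_quality}
    total_quality = sum(eligible.values())
    budget = {}
    for entity in entity_quality:
        if entity in eligible and total_quality > 0:
            # Round down so the allocations never exceed the total budget
            budget[entity] = int(total_budget * eligible[entity] / total_quality)
        else:
            budget[entity] = 0
    return budget


scores = {"author_a": 0.9, "author_b": 0.6, "mass_ai_site": 0.1}
print(allocate_crawl_budget(scores, total_budget=1500))
```

Proportional allocation is only one possible design; a real system could just as well use tiers or per-entity caps.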

Google has repeatedly emphasized that quality plays a special role in crawling and indexing (see here and here).

Which measurable factors can influence E-E-A-T?

Disclaimer: E-E-A-T signals not officially verified by Google

First of all, I would like to make it clear that the factors listed below for an E-E-A-T rating are largely not confirmed by Google. But they are also more than an opinion, as I have derived them from various Google patents, white papers and scientific papers from Google. They are therefore better founded than much of what you hear and read in the industry. I have provided the sources for each factor so you can dig deeper if you like.

Possible factors for an E-E-A-T rating include:

Offpage E-E-A-T Factors:

- PageRank or references to the author / publisher

- Distance to trust seed sites in link graph

- Backlink anchor text

- Different signals for author/publisher credibility

  - How long the author has been producing content in a subject area

  - Awareness of the author

  - Ratings of published content by users

  - Whether the author's content is published by another publisher with above-average ratings

  - The amount of content published by the author

  - How long ago the author last published

  - Ratings of the author's previous publications on a similar topic

  - Author's experience based on time

  - Amount of content published on a topic

  - Elapsed time since the last publication

- Mentions of the author / publisher in award and best-of lists

- Click-through rate on an author’s/publisher’s content

- Sentiment around mentions or ratings

- Co-occurrences of the author / publisher with thematically relevant terms in videos, podcasts & documents

- Co-occurrences of the author / publisher with thematically relevant terms in search queries

- Percentage of content that an author / publisher has contributed to a thematic document corpus
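The offpage factor "distance to trust seed sites in link graph" can be illustrated with a breadth-first search: starting from a set of trusted seed sites, it counts the minimum number of link hops to every other site. The link graph and the seed site below are hypothetical. Published approaches such as TrustRank propagate trust scores through the graph rather than just counting hops, so treat this as a simplified sketch.

```python
from collections import deque


def seed_distances(links, seeds):
    """Return the minimum number of link hops from any seed site to each reachable site.

    links: dict mapping a site to the list of sites it links to (hypothetical data).
    seeds: iterable of trusted seed sites (hypothetical).
    Sites that cannot be reached from any seed are absent from the result.
    """
    dist = {s: 0 for s in seeds}
    queue = deque(dist)
    while queue:
        site = queue.popleft()
        for target in links.get(site, []):
            if target not in dist:  # first visit = shortest hop count in an unweighted graph
                dist[target] = dist[site] + 1
                queue.append(target)
    return dist


links = {
    "seed.example": ["a.example", "b.example"],
    "a.example": ["c.example"],
    "b.example": ["c.example", "d.example"],
    "d.example": ["e.example"],
}
print(seed_distances(links, ["seed.example"])["e.example"])  # 3
```

A smaller distance would then count as a stronger trust signal; unreachable sites get no trust from the seed set at all.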

Onpage E-E-A-T Factors:

- Transparency to the author / publisher via author profiles & about-us pages

- Links to own references

- Use of https on the domain

- Knowledge Based Trust (agreement with conventional wisdom and facts)

- Overall quality of website content (helpful content)

- Extent of the created content sitewide

- Quotations and external link references to authoritative sources

- Total user interactions with the created content
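Knowledge Based Trust can be approximated, in a strongly simplified form, as the share of a page's checkable facts that agree with a reference knowledge base. The sketch assumes the facts have already been extracted as (subject, predicate, object) triples; the extraction step and the example data are hypothetical. The original Knowledge-Based Trust research uses a probabilistic model that also accounts for extraction errors, not this simple ratio.

```python
def kbt_score(page_facts, knowledge_base):
    """Share of a page's checkable facts that agree with a reference knowledge base.

    page_facts: list of (subject, predicate, object) triples extracted from a page.
    knowledge_base: dict mapping (subject, predicate) -> accepted object.
    Facts whose (subject, predicate) is unknown to the knowledge base are skipped.
    Returns None if no fact on the page can be checked.
    """
    checked = agreeing = 0
    for subject, predicate, obj in page_facts:
        key = (subject, predicate)
        if key not in knowledge_base:
            continue  # cannot be verified either way
        checked += 1
        if knowledge_base[key] == obj:
            agreeing += 1
    return agreeing / checked if checked else None


kb = {("Berlin", "capital_of"): "Germany", ("Paris", "capital_of"): "France"}
facts = [
    ("Berlin", "capital_of", "Germany"),  # agrees
    ("Paris", "capital_of", "Italy"),     # contradicts
    ("Rome", "capital_of", "Italy"),      # not in the knowledge base, skipped
]
print(kbt_score(facts, kb))  # 0.5
```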

In the article 14 ways Google may evaluate E-A-T, I specifically discuss possible factors for the E-E-A-T rating.

Based on Google patents, scientific papers, our own experience and statements from Google, we have created the following overview of possible measurable factors for an E-E-A-T rating. You can find more on my research in my article The most interesting Google patents and scientific papers on E-E-A-T.

Feel free to download, print and share it. Thank you!

E-E-A-T evaluation via vector space analyses

The signals listed here can be applied in aggregate to source entities such as authors or organizations as well as domains. In this context, the two Google patents Website Representation Vector and Generating author vectors are particularly exciting. The second patent describes how authors and their content can be identified and mapped into vectors by training neural networks.

The first patent describes how websites/domains are mapped as vectors and can be classified into the classes expert, apprentice and layman for specific knowledge domains. This classification system can serve as a benchmark for evaluating new content. Taken together, the two patents suggest how an E-E-A-T classification based on thresholds could be carried out at scale for authors, companies and domains, taking into account the sum of the signals listed here.

If domains or source entities are mapped as vectors, identified and assigned to topics, they can be evaluated with regard to E-E-A-T via measurable signals. This produces a picture of how trustworthy the source is in relation to a topic, and the source can be classified into different authority or trust levels, e.g. Expert, Apprentice or Layman. Depending on the level, content from the source could then receive a ranking bonus via a quality classifier in addition to the relevance scoring (document scorer) of the individual content.
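The level classification can be sketched with a nearest-centroid approach: each level (expert, apprentice, layman) is represented by a centroid vector for one knowledge domain, and a source vector is assigned to the level with the highest cosine similarity. The three-dimensional vectors are hypothetical, and nearest-centroid classification is a stand-in chosen for illustration. The patents describe vectors produced by trained neural networks, not necessarily this exact procedure.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def classify_source(source_vec, centroids):
    """Assign a source vector to the level whose centroid is most similar."""
    return max(centroids, key=lambda level: cosine(source_vec, centroids[level]))


# Hypothetical centroids for one knowledge domain (illustrative values only)
centroids = {
    "expert": [0.9, 0.8, 0.1],
    "apprentice": [0.5, 0.4, 0.5],
    "layman": [0.1, 0.2, 0.9],
}
print(classify_source([0.85, 0.75, 0.2], centroids))  # expert
```

In this picture, the assigned level would be the input for the quality classifier mentioned above.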

For YMYL topics, the source must have a certain level in order to be able to rank with content on the first search result page.

E-E-A-T rating: A mixture of Coati (Panda), links & entity signals

The theories explained in this section are not endorsed by Google but make sense in the historical context of developments in Google search, and some are listed in Google patents and scientific papers.

Three years before Google first introduced E-A-T in the Quality Rater Guidelines, the search engine rolled out a quality update called Panda in 2011, which addressed the overall quality of website content. In his SMX 2022 keynote, Hyung-Jin Kim, VP of Search at Google, explained that Panda was replaced by an update called Coati. Coati is a new version of Panda and part of the core ranking algorithm.

In 2012, Google rolled out another quality update called Penguin which was related to the detection and penalization of unnatural links.

Both updates were similar in procedural methodology to the core updates we have seen from Google since 2018 and are closely related to E-E-A-T. Panda (now Coati), Penguin and the core updates are or were rolled out in single major releases and were quality updates designed to keep certain websites out of the top search results. All of them were also more or less topic-related.

With the Hummingbird update in 2013, Google created the basis not only to better understand documents and search queries, but also to make an E-E-A-T evaluation of entities such as authors and publishers possible. Thus, E-E-A-T is an integral part of Google’s journey to becoming a semantic search engine.

Because of this, I think Panda and Penguin served as preparation for what Google was going to do with E-A-T years later.

It is possible that parts of the updates at that time were incorporated into the algorithms for the E-E-A-T rating. This was then joined by other signals that are used for entity evaluation with respect to E-E-A-T. (More about this in the article Entities & E-A-T: The role of entities in authority and trust )

The Role of E-E-A-T in the Ranking Process at Google

E-E-A-T is to be understood as a kind of meta-evaluation of a publisher, author or the associated domain in relation to one or more subject areas. In contrast, Google evaluates relevance at the document level, i.e. each individual piece of content in relation to the respective search query and its search intent. One can say that Google evaluates the quality of a publisher/author via E-E-A-T and the relevance via classic information retrieval methods such as text analysis, combined with machine learning innovations such as RankBrain and natural language processing.

Similar to the cleaning engine, E-E-A-T can be understood as a kind of layer that is applied to a list of search results sorted by a score. Based on the relevance signals, Google evaluates the content from the index in the scoring process at document level and then uses the collected quality signals to check the expertise, authority and trust of the sources. Accordingly, there is then a ranking bonus or a downgrade of the search results previously sorted by relevance.
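The two-stage process described above can be sketched as follows: documents are first sorted by a relevance score, only the top n are kept, and a source-level quality modifier is then applied as a second layer. The `Doc` fields, the level labels and the multipliers in `LEVEL_MODIFIER` are hypothetical values chosen for illustration, not known Google parameters.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    url: str
    source: str       # source entity: domain, publisher or author
    relevance: float  # document-level relevance score for the query


# Hypothetical quality modifiers per source level (illustrative values only)
LEVEL_MODIFIER = {"expert": 1.3, "apprentice": 1.0, "layman": 0.7}


def rerank(docs, source_levels, top_n=3):
    """Stage 1: keep the top_n documents by relevance.
    Stage 2: re-sort them after applying a source-level quality modifier."""
    candidates = sorted(docs, key=lambda d: d.relevance, reverse=True)[:top_n]

    def final_score(doc):
        # Unknown sources are treated as "layman" (a conservative assumption)
        level = source_levels.get(doc.source, "layman")
        return doc.relevance * LEVEL_MODIFIER[level]

    return sorted(candidates, key=final_score, reverse=True)


docs = [
    Doc("/forum-thread", "forum.example", 0.92),
    Doc("/clinic-guide", "clinic.example", 0.85),
    Doc("/blog-post", "blog.example", 0.80),
    Doc("/shop-page", "shop.example", 0.40),
]
levels = {"clinic.example": "expert", "blog.example": "apprentice", "forum.example": "layman"}
print([d.url for d in rerank(docs, levels)])  # ['/clinic-guide', '/blog-post', '/forum-thread']
```

The shop page never reaches the second stage because it is not among the top n by relevance, which mirrors the idea that only the top n documents are scored in detail.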

E-E-A-T as a meta-level ranking influence

Many SEOs focus on the document level for E-E-A-T and believe that optimizing a single piece of content will improve an E-E-A-T rating. In the Quality Rater Guidelines, Google also refers to evaluating the Main Content, but the main emphasis is on evaluating the content creator.

In my opinion, the content of a website plays a role primarily in the sum of the content. Similar to Panda, the quality of the sum of the content in a thematic area is evaluated.

Therefore, E-E-A-T plays a role mainly at the domain and source entity level. E-E-A-T should rather be understood as a sitewide or topic-wide layer that is applied after relevance scoring at document level.

What does YMYL mean in relation to E-E-A-T?

YMYL is the abbreviation for Your-Money-Your-Life. YMYL can relate to the topic of a piece of content as well as to the source/domain. So-called YMYL pages include websites and content on the following topics:

- News and Current Events: News on important topics such as international events, business, politics, science, technology, etc. Note that not all news articles are necessarily considered YMYL (e.g., sports, entertainment, and everyday lifestyle topics are generally not YMYL). Please use your judgment and knowledge of your locale. This is where content and publishers play a key role, especially in Google News searches.

- Government and Law: Information important to maintaining an informed citizenry, such as information about voting, government agencies, public facilities, social services, and legal issues (e.g., divorce, child custody, adoption, creating a will, etc.).

- Finance: Financial advice or information about investing, taxes, retirement planning, loans, banking, or insurance, especially websites where people can make purchases or transfer money online.

- Shopping: Information or services related to researching or purchasing goods/services, specifically websites where people can make purchases online.

- Health and Safety: Advice or information about medical issues, medications, hospitals, emergency preparedness, how dangerous an activity is, etc.

- Groups of people: Information about or claims related to groups of people, including but not limited to those grouped by race or ethnicity, religion, disability, age, national origin, veteran status, sexual orientation, gender, or gender identity.

- Other: There are many other topics related to major decisions or important aspects of people's lives that are considered YMYL, such as fitness and nutrition, housing information, choosing a college, finding a job, etc.

As can be seen, large portions of the Internet and online content fall into the group of YMYL sites.

For YMYL topics, E-E-A-T plays a very large role and is a gatekeeper for the first 10 to 20 search results.
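One way to picture this gatekeeper role is a filter that only lets sources at or above a minimum level into the first positions, while other results can still appear further down. The level scale, the `min_level` default and the number of gated positions are hypothetical parameters for illustration.

```python
# Hypothetical ordinal scale of source levels (illustrative only)
LEVEL_RANK = {"layman": 0, "apprentice": 1, "expert": 2}


def ymyl_gate(ranked_sources, source_levels, min_level="apprentice", gated_positions=10):
    """Reserve the first gated_positions slots for sources at or above min_level.

    ranked_sources: sources in their current ranking order.
    source_levels: dict mapping a source to a level; unknown sources count as "layman".
    All remaining sources keep their original relative order after the gated slots.
    """
    threshold = LEVEL_RANK[min_level]
    qualified = [s for s in ranked_sources
                 if LEVEL_RANK[source_levels.get(s, "layman")] >= threshold]
    top = qualified[:gated_positions]
    rest = [s for s in ranked_sources if s not in top]
    return top + rest


ranking = ["forum.example", "clinic.example", "blog.example", "news.example"]
levels = {"clinic.example": "expert", "blog.example": "apprentice", "news.example": "expert"}
print(ymyl_gate(ranking, levels, gated_positions=2))
# ['clinic.example', 'blog.example', 'forum.example', 'news.example']
```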

How important is E-E-A-T for non-YMYL topics?

Google has clearly stated the importance of E-E-A-T for YMYL topics in the Quality Rater Guidelines and various other statements. How important E-E-A-T is for non-YMYL topics remains a matter of speculation. It is possible that E-E-A-T is included in the ranking on an equal footing with the classic information retrieval methods.

What are the Quality Rater Guidelines and why are they closely linked to E-E-A-T?

E-A-T was first mentioned in the Quality Rater Guidelines in 2014.

The Quality Rater Guidelines are a detailed document that Google provides to search evaluators, or quality raters, to evaluate the search results experience when algorithm changes are tested.

The term Quality Rater Guidelines has not been used by Google for some time. The official name is “Search Quality Rater Guidelines” or General Guidelines.

Learn more about Google’s Quality Rater Guidelines here, here and here. (An introduction to the Quality Rater Guidelines in German is available here.)

Experience + Expertise = relevance of the entity

An expert is someone who has specialized knowledge in one or more specific areas. Wikipedia offers the following definition:

An expert is somebody who has a broad and deep understanding and competence in terms of knowledge, skill and experience through practice and education in a particular field.

Expertise means having expert knowledge and, according to the QRG, can refer to both an author and a content editor. I also like to call it entity relevance. Entity relevance ensures that Google considers a domain, publisher or author as a possible source for a given topic.

Combined with a document’s relevance, entity relevance can be the deciding factor in getting into the top n documents for ranking in the first place. A source or entity that has no relevance or expertise to a topic will have a much harder time being included in the top n search results.

For more information on determining the relevance of documents, see my article How can Google identify and rank relevant documents via entities, NLP & vector space analysis?

Authority = importance of the entity with regard to a topic / industry

An authority is a person or organization whose expertise in a field is widely recognized by others, so that their statements carry particular weight.

In other words, an authority is recognized as an expert and thus possesses entity relevance, a good reputation and great influence. An authority belongs to the relevant set in an industry or topic. Applied to Google, it is a source that should be listed on the first search results pages because users expect it there. This criterion plays a major role especially for YMYL (Your Money Your Life) topics.

Trust = reputation of the entity

Trust is closely related to the reputation of a person or source. Wikipedia describes trust as follows:

Trust is the willingness of one party (the trustor) to become vulnerable to another party (the trustee) on the presumption that the trustee will act in ways that benefit the trustor.

Reputation has a similar meaning in the context of search engines. Wikipedia defines it as follows:

The reputation of a social entity (a person, a social group, an organization, or a place) is an opinion about that entity typically as a result of social evaluation on a set of criteria, such as behaviour or performance.

Reputation is about prestige. It means the perception of a person or organization from the outside. Reputation and trust are closely and directly related. About this in the QRG:

Reputation is an important criteria when using the High rating, and informs the E-A-T of the page. While a page can merit the High rating with no reputation, the High rating cannot be used for any website that has a convincing negative reputation. Remember that when doing research, make sure to consider the reasons behind a negative rating and not just the rating itself.

As an author or publisher, can you also be rated well in terms of E-E-A-T in multiple areas?

One of the most common questions about E-E-A-T is whether you can have authority, trust and expertise in multiple subject areas in the eyes of Google. The answer is a vague “it depends”. E-E-A-T is topic-related: theoretically, it is possible to be rated well in terms of E-E-A-T in different topics, especially when the topics are semantically adjacent. However, an SEO who also wants to position themselves as a psychologist will have a hard time, because they have to build up two different identities in order to be perceived as an authority and expert in both areas. In addition, care should be taken to ensure that both identities are clearly separated from one another in different ontologies with different profiles, digital representations and content.

Why is E-E-A-T so important for Google?

The consideration of author and publisher in terms of credibility and expertise has become increasingly important for Google, in particular due to social pressure on Google, for example as a result of the discussions surrounding fake news. For the user experience, too, a high degree of accuracy and relevance is a top priority for search engines. Read more in the article INSIGHTS FROM THE WHITEPAPER “HOW GOOGLE FIGHTS MISINFORMATION” ON E-A-T AND RANKING

The E-A-T ratings introduced in version 5.0 of the Quality Rater Guidelines as part of the Page Quality (PQ) rating show how important the factors of expertise, authority and trust are for Google.

The Quality Rater Guidelines list the following important criteria for evaluating a website:

- The Purpose of the Page

- Expertise, Authoritativeness, Trustworthiness: This is an important quality characteristic. Use your research on the additional factors below to inform your rating.

- Main Content Quality and Amount: The rating should be based on the landing page of the task URL.

- Website Information/information about who is responsible for the MC: Find information about the website as well as the creator of the MC.

- Website Reputation/reputation about who is responsible for the MC: Links to help with reputation research will be provided.

Expertise, authority and trustworthiness are currently described in the Quality Rater Guidelines as follows:

- The expertise of the MC creator.

- The authority of the MC creator, the MC itself and the website.

- The trustworthiness of the MC creator, the MC itself and the website.

Since 2018, there have been several major core updates from Google suggesting that signals feeding into the concept of E-E-A-T have become significantly more important for ranking. Google has given no concrete indications as to exactly which signals feed into E-E-A-T. However, there are some indirect indications in white papers and patents as well as in the Quality Rater Guidelines (Search Quality Evaluator Guidelines) already mentioned.

Google has been under a lot of pressure for a few years now regarding misinformation in search results, which is underlined by the whitepaper “How Google fights Disinformation” presented at the Munich Security Conference in February 2019. Read more in the article .

Google wants to optimize its own search system to provide great content for the respective search queries depending on the context of the user and taking into account the most reliable sources. The Quality Raters play a special role here.

“We publish publicly available rater guidelines that describe in great detail how our systems intend to surface great content… we like to say that Search is designed to return relevant results from the most reliable sources available… But notions of relevance and trustworthiness are ultimately human judgments, so to measure whether our systems are in fact understanding these correctly, we need to gather insights from people.” Source: https://blog.google/products/search/raters-experiments-improve-google-search/

The evaluation according to E-E-A-T criteria plays a central role for the Quality Raters.

“They evaluate whether those pages meet the information needs based on their understanding of what that query was seeking, and they consider things like how authoritative and trustworthy that source seems to be on the topic in the query. To evaluate things like expertise, authoritativeness, and trustworthiness—sometimes referred to as ‘E-A-T’—raters are asked to do reputational research on the sources.” Source: https://blog.google/products/search/raters-experiments-improve-google-search/

For the user experience, high-quality search results are Google's focus. This becomes clear from statements by various Google spokespeople about a quality factor at the document and domain level.

For example, the following statements by Paul Haahr at SMX West 2016 in his presentation How Google works: A Google Ranking Engineer’s Story:

“Another problem we were having was an issue with quality and this was particularly bad (we think of it as around 2008 2009 to 2011) we were getting lots of complaints about low-quality content and they were right. We were seeing the same low-quality thing but our relevance metrics kept going up and that’s because the low-quality pages can be very relevant this is basically the definition of a content farm in our vision of the world so we thought we were doing great our numbers were saying we were doing great and we were delivering a terrible user experience and turned out we weren’t measuring what we needed to so what we ended up doing was defining an explicit quality metric which got directly at the issue of quality it’s not the same as relevance …. and it enabled us to develop quality related signals separate from relevant signals and really improve them independently so when the metrics missed something what ranking engineers need to do is fix the rating guidelines… or develop new metrics.”

This quote is from the part of the presentation on the Quality Rater Guidelines and E-A-T. In this talk, Paul also mentions that trustworthiness is the most important part of E-A-T. According to Paul, the criteria for bad and good content and websites described in the Quality Rater Guidelines are the blueprint for how the ranking algorithm should work.

Also from 2016 is the following statement by John Mueller from a Google Webmaster Hangout:

For the most part, we do try to understand the content and the context of the pages individually to show them properly in search. There are some things where we do look at a website overall though.

So for example, if you add a new page to a website and we’ve never seen that page before, we don’t know what the content and context is there, then understanding what kind of a website this is helps us to better understand where we should kind of start with this new page in search.

So that’s something where there’s a bit of both when it comes to ranking. It’s the pages individually, but also the site overall.

I think there is probably a misunderstanding that there’s this one site-wide number that Google keeps for all websites and that’s not the case. We look at lots of different factors and there’s not just this one site-wide quality score that we look at. So we try to look at a variety of different signals that come together, some of them are per page, some of them are more per site, but it’s not the case where there’s one number and it comes from these five pages on your website.

Here, John emphasizes that in addition to the classic relevance ratings, there are also rating criteria for the complete website that relate to the thematic context of the entire website. This means that there are signals that Google uses to classify the entire website thematically as well as to evaluate it. The proximity to the E-E-A-T rating is obvious.

In addition, in April 2020 Google confirmed that the E-A-T concept is closely aligned with the signals its ranking algorithm takes into account, and suggested that webmasters evaluate their own content according to E-A-T criteria.

“We’ve tried to make this mix align what human beings would agree is great content as they would assess it according to E-A-T criteria. Given this, assessing your own content in terms of E-A-T criteria may help align it conceptually with the different signals that our automated systems use to rank content.”

In the Quality Rater Guidelines, you can find this passage that emphasizes the importance of E-E-A-T:

“Expertise, Authoritativeness, Trustworthiness: This is an important quality characteristic. …. Important: Lacking appropriate EAT is sufficient reason to give a page a Low quality rating.”

The Google whitepaper mentioned earlier also contains several passages about E-E-A-T and the Quality Rater Guidelines:

“We continue to improve on Search every day. In 2017 alone, Google conducted more than 200,000 experiments that resulted in about 2,400 changes to Search. Each of those changes is tested to make sure it aligns with our publicly available Search Quality Rater Guidelines, which define the goals of our ranking systems and guide the external evaluators who provide ongoing assessments of our algorithms.”

“The systems do not make subjective determinations about the truthfulness of webpages, but rather focus on measurable signals that correlate with how users and other websites value the expertise, trustworthiness, or authoritativeness of a webpage on the topics it covers.”

“Ranking algorithms are an important tool in our fight against disinformation. Ranking elevates the relevant information that our algorithms determine is the most authoritative and trustworthy above information that may be less reliable. These assessments may vary for each webpage on a website and are directly related to our users’ searches. For instance, a national news outlet’s articles might be deemed authoritative in response to searches relating to current events, but less reliable for searches related to gardening.”

“Our ranking system does not identify the intent or factual accuracy of any given piece of content. However, it is specifically designed to identify sites with high indicia of expertise, authority, and trustworthiness.”

“For these “YMYL” pages, we assume that users expect us to operate with our strictest standards of trustworthiness and safety. As such, where our algorithms detect that a user’s query relates to a “YMYL” topic, we will give more weight in our ranking systems to factors like our understanding of the authoritativeness, expertise, or trustworthiness of the pages we present in response.”

The following statement is particularly revealing. It shows how strongly E-E-A-T can outweigh classic relevance factors in certain contexts, such as developing news events:

“To reduce the visibility of this type of content, we have designed our systems to prefer authority over factors like recency or exact word matches while a crisis is developing.”

In recent years, the effects of E-E-A-T have been observable in various Google core updates.

Frequently asked questions about E-E-A-T

The following questions are frequently asked regarding E-E-A-T:

Can structured data help with an E-E-A-T evaluation?

Yes, structured data helps Google identify relationships between entities and attributes more quickly, i.e. entity-attribute-value pairs and/or relationships between entities. This can lead to a faster understanding of a source entity in terms of its thematic context. However, structured data can easily be manipulated, so it is not well suited as a basis for an E-E-A-T evaluation. According to Google, structured data is not a ranking factor. Furthermore, Google uses structured data as manually labeled training data. This data helps the machine learning algorithms recognize patterns and extract information about entities in the future, even without structured data.
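To illustrate what entity-attribute-value pairs in structured data look like, here is a minimal sketch that builds schema.org `Person` markup as JSON-LD using Python's standard `json` module and then flattens it into (entity, attribute, value) triples. The person, job title, topics, employer and profile URL are hypothetical placeholders. `name`, `jobTitle`, `knowsAbout`, `worksFor` and `sameAs` are schema.org properties, but whether and how Google weighs any of them is not confirmed; the helper functions are illustrative only.

```python
import json


def person_jsonld(name, job_title, topics, employer, profiles):
    """Build schema.org Person markup (JSON-LD) for a hypothetical author."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,                 # the entity itself
        "jobTitle": job_title,        # attribute -> value
        "knowsAbout": topics,         # thematic context of the entity
        "worksFor": {"@type": "Organization", "name": employer},  # entity-entity relation
        "sameAs": profiles,           # other representations of the same entity
    }


def to_triples(markup):
    """Flatten top-level markup into (entity, attribute, value) triples."""
    subject = markup["name"]
    triples = []
    for key, value in markup.items():
        if key.startswith("@") or key == "name":
            continue  # skip JSON-LD keywords and the subject itself
        values = value if isinstance(value, list) else [value]
        for v in values:
            if isinstance(v, dict):
                v = v.get("name")  # represent nested entities by their name
            triples.append((subject, key, v))
    return triples


if __name__ == "__main__":
    markup = person_jsonld(
        name="Jane Example",
        job_title="SEO Consultant",
        topics=["Search engine optimization", "Semantic search"],
        employer="Example Agency",
        profiles=["https://www.example.com/about/jane-example"],
    )
    print(json.dumps(markup, indent=2))
    for triple in to_triples(markup):
        print(triple)
```

The triples make explicit which attributes and relationships the markup asserts about the entity; as noted above, such self-declared statements are easy to manipulate.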

Is E-E-A-T a ranking factor?

According to Google, E-E-A-T itself is not a stand-alone ranking factor with a score, but rather a mix of different factors that feed into the E-E-A-T concept and together form an overall pattern.

“After identifying relevant content, our systems aim to prioritize those that seem most helpful. To do this, they identify a mix of factors that can help determine which content demonstrates aspects of experience, expertise, authoritativeness, and trustworthiness, or what we call E-E-A-T.” Source: https://developers.google.com/search/docs/fundamentals/creating-helpful-content?hl=en

“While E-E-A-T itself isn’t a specific ranking factor, using a mix of factors that can identify content with good E-E-A-T is useful. For example, our systems give even more weight to content that aligns with strong E-E-A-T for topics that could significantly impact the health, financial stability, or safety of people, or the welfare or well-being of society. We call these “Your Money or Your Life” topics, or YMYL for short.” Source: https://developers.google.com/search/docs/fundamentals/creating-helpful-content?hl=en

E-E-A-T works more like a classifier built from a set of signals that together paint a picture of quality and trust. The question it answers is essentially: is this source an expert and an authority or not?

Can an E-E-A-T rating deteriorate over time?

Yes. If an entity such as a publisher or author has not published content on a topic for a long time, its E-E-A-T rating for that topic will decline over the years. In my own experience, however, this can take several years.

If the future is more about brand, personality and authority, how likely is it that a market for some kind of authority source management will emerge, similar to the field of review management? After all, I would theoretically “win” if I controlled a high-quality source and then offered it as an E-E-A-T platform.

In my opinion, some kind of authority management is becoming more and more important. We should always keep an eye on our brand online and regularly check whether our thematic brand positioning is consistent: is our brand mentioned together with the terms that are thematically relevant to the topics we want to position ourselves for? Co-occurrences of the entity or brand name with these terms should occur as frequently as possible.
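As a rough way to run such a check on your own data, the following minimal sketch counts in how many sentences a brand name appears together with each topic term. It assumes you have already collected text snippets that mention the brand (for example from press coverage) and hand-picked the topic terms; the brand, terms and snippets are hypothetical, and this is a monitoring aid, not a model of how Google measures co-occurrence.

```python
import re
from collections import Counter


def split_sentences(text):
    # Naive split after ".", "!" or "?" followed by whitespace (a simplification)
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def brand_cooccurrences(texts, brand, topic_terms):
    """Count the sentences in which the brand appears together with each topic term.

    Matching is case-insensitive substring matching, so a short term can also
    match inside longer words; a real check would need proper tokenization.
    """
    counts = Counter()
    brand_lc = brand.lower()
    for text in texts:
        for sentence in split_sentences(text):
            s = sentence.lower()
            if brand_lc not in s:
                continue  # only sentences that name the brand are relevant
            for term in topic_terms:
                if term.lower() in s:
                    counts[term] += 1
    return counts


if __name__ == "__main__":
    snippets = [
        "Example Agency published a study on semantic search. The weather was nice.",
        "According to Example Agency, entity SEO is changing. Semantic search matters.",
        "Fitness tips for beginners.",
    ]
    terms = ["semantic search", "entity SEO", "fitness"]
    print(brand_cooccurrences(snippets, "Example Agency", terms))
```

Counting per sentence rather than per document is a design choice: it keeps the brand and the term close together, which is closer to the idea of being named in the same thematic context.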

What about different topics, for example if an author writes about marketing, fitness or travel?

One of the most common questions about E-E-A-T is whether you can have authority, trust, experience and expertise in different topics in the eyes of Google. E-E-A-T is a topic-based ranking concept. A source entity that is positioned across too broad a range of topics dilutes its expertise and authority, and its thematic environment appears inconsistent. To avoid dilution and inconsistency, consider clearly separating the different representations of the entity (website, profiles …) if the thematic fields are semantically too far apart.

Theoretically, it is possible to score well on E-E-A-T in several topics, especially when the topics are semantically adjacent. But it takes much more effort, because the positioning starts from zero for each topic. If the topics are too far apart, no synergy effects arise.

An SEO who also wants to position himself as a psychologist will have a hard time, because he has to build up two different identities in order to be perceived as an authority and expert in both areas. In addition, care should be taken to keep the two identities clearly separated from one another, each in its own ontology with its own profiles, digital representations and content.

Can E-E-A-T be optimized by having the brand or person mentioned repeatedly in a relevant context, so that offpage optimization becomes more important?

Yes. In the Quality Rater Guidelines, Google repeatedly instructs raters to rely on independent external sources when rating the source entity and to be skeptical of what the entity says about itself.

Therefore, offpage optimization plays an important role far beyond link building. Co-occurrences, i.e. the source entity being named together with relevant terms and entities, as well as brand mentions in authoritative media and in search queries, play an important role in the E-E-A-T evaluation. The more frequently these patterns are generated in an algorithmically recognizable manner, the better.

Does the UX (Core Web Vitals) of a website influence E-E-A-T?

UX plays hardly any role in E-E-A-T. Experience, expertise, authority and trust refer to the content creator’s knowledge, experience and authority. Content UX can play an indirect role in E-E-A-T, but functional and design UX do not.

If a source is not relevant enough for Wikipedia, what E-E-A-T sources can be used instead?

Many companies and authors do not meet Wikipedia’s relevance criteria. Besides Wikipedia, Google can use many other sources to assign the source entity to a thematic context, for example:

- About us page on the website

- Author profiles

- Speaker profiles

- Social media profiles

- Content in authoritative (professional) media

If you want to know more about Wikipedia’s relevance criteria, contact us for a no-obligation review.

Can Google “learn” & associate the entity with an author for a particular expertise area faster if the name is found alongside already established entities in the same expertise area on other websites?

Yes. Co-occurrences with other recognized entities in a topic area create a trustworthy environment in terms of authority and expertise.

Does the addition of Experience affect the other signals (Expertise, Authoritativeness, Trustworthiness)? After all, Expertise somehow includes Experience, right?

Experience and Expertise are not really different from each other. In my personal opinion, the second E (Experience) did not bring any real innovation to the E-E-A-T concept. Google added Experience to E-E-A-T in December 2022, right as the hype around ChatGPT was emerging. I believe this addition was more of a PR move to distract from the ChatGPT hype.

How can E-E-A-T be applied to eCommerce, where the author as a person plays a minor role? Is substance (expertise) missing here in the mid-tail (category pages, blog articles, content marketing)?

E-E-A-T can be applied to any type of source entity. Source entities can be authors as well as companies or organizations and their digital representations, such as domains. Google points out in the Quality Rater Guidelines that if an author is unknown, the reputation of the website operator should be rated instead.

What do generative AI, Bard and PaLM 2 have to do with E-E-A-T?

E-E-A-T may play an important role in the future, both in training Google’s LLMs and in the information that generative AI outputs. On the one hand, Google is likely to use only trusted sources as training data for its LLMs. On the other hand, it will become increasingly important to position one’s own brand and product entities within these trusted sources in the respective thematic context, in order to be identified as a thematic authority by the AI itself or to increase the statistical probability of being mentioned in thematically relevant outputs.

Terms like GAIO or LLM optimization are currently being discussed in this context. However, data scientists view these methods skeptically. The problem: we don’t know exactly which training data Google will use to train LLMs like PaLM.

How important is E-E-A-T at Google?

The influence on rankings of the signals that feed into the E-E-A-T rating concept has increased sharply since 2018. It is increasingly important for Google to rely on content from trusted and authoritative sources. The influence is particularly high for YMYL topics and keywords.

Is there an E-E-A-T score?

E-E-A-T is more of a concept than a concrete metric for evaluating websites and individual pieces of content. The E-E-A-T score is a myth: according to Google, there is no single E-E-A-T score into which all signals are aggregated or added up. I can imagine that Google forms an overall impression of the E-E-A-T of an author, publisher or website through many different algorithms. This overall impression is less a score than an approximation to a reference pattern of an entity that has strong E-E-A-T. Google could use selected sample entities to train its algorithms to produce such a benchmark pattern for E-E-A-T. The more closely an entity resembles this pattern across different signals, the higher its assessed quality. More on this in the related posts I mentioned earlier.
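The idea of comparing an entity with a benchmark pattern can be illustrated with a toy sketch: represent each entity as a vector of normalized signal values, average the vectors of some reference entities into a centroid, and measure how closely a candidate resembles that centroid with cosine similarity. The signal names, all values and the choice of centroid plus cosine similarity are assumptions for illustration only; Google has not disclosed how, or whether, it compares entities this way.

```python
import math

# Hypothetical signals; each vector below lists values in this order, normalized to 0..1.
SIGNALS = ["topical_cooccurrences", "authoritative_backlinks", "brand_searches", "expert_mentions"]


def centroid(vectors):
    """Average equal-length signal vectors into one reference (benchmark) pattern."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hypothetical sample entities assumed to have strong E-E-A-T
reference_entities = [
    [0.9, 0.8, 0.7, 0.9],
    [0.8, 0.9, 0.8, 0.7],
]
benchmark = centroid(reference_entities)

# Hypothetical candidates: one with a balanced profile, one relying mostly on backlinks
candidates = {
    "established_expert": [0.85, 0.8, 0.75, 0.8],
    "link_heavy_newcomer": [0.1, 0.9, 0.0, 0.1],
}

if __name__ == "__main__":
    for name, vector in candidates.items():
        print(name, round(cosine_similarity(vector, benchmark), 3))
```

Cosine similarity compares the shape of the signal profile and ignores its overall magnitude, so a uniformly weak entity would score the same as a uniformly strong one with the same proportions; a more realistic sketch would also take absolute signal strength into account.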

Which ranking signals does Google use to evaluate E-E-A-T?

Google provides little information here. The only indirect statement concerns the PageRank signal, i.e. backlinks. It can be assumed that mainly offpage signals such as co-occurrences, backlinks and search patterns are included in the E-E-A-T evaluation.

More reading tips and videos on E-E-A-T assessment and YMYL

- Search Quality Evaluator Guidelines

- Entities and E-A-T: The role of entities in authority and trust

- E-A-T & TOPICAL BRAND POSITIONING AS A CRITICAL SUCCESS FACTOR IN SEO

- Lily Ray on E-A-T

- What is E-A-T by Marie Haynes

- Google Updates Quality Rater Guidelines Targeting E-A-T, Page Quality & Interstitials by Jennifer Slegg

- Why E-A-T & Core Updates Will Change Your Content Approach by Fajr Muhammad

- Google’s Core Algorithm Updates and The Power of User Studies: How Real Feedback From Real People Can Help Site Owners Surface Website Quality Problems by Glenn Gabe

- Google Author Rank: How Google Knows which Content Belongs to Which Author? by Koray Tuğberk GÜBÜR

- E-E-A-T: More than an introduction to Experience ,Expertise, Authority, Trust - 4. January 2024