How does Google understand search terms through search query processing?

This article deals with the role of entities in the interpretation of search queries. I will look at some Google patents from the last few years and draw conclusions about today's search query processing.

Have fun while reading!

Contents

- 1 Search Query Processing Methodology

- 2 Google wants to recognize the meaning and search intent of search queries

- 3 Classification of the search term in a thematic ontology

- 4 Rankbrain is an entity-based search query processor

- 5 Identification of entities in search queries (Named Entity Recognition)

- 6 Semantic enrichment of search queries

- 7 Refinement of search queries

- 8 Other exciting Google patents related to search query processing

- 9 Further Google patents for entity-based interpretation of search queries

- 10 The interaction of Rankbrain, BERT, MUM and Knowledge Graph

Search Query Processing Methodology

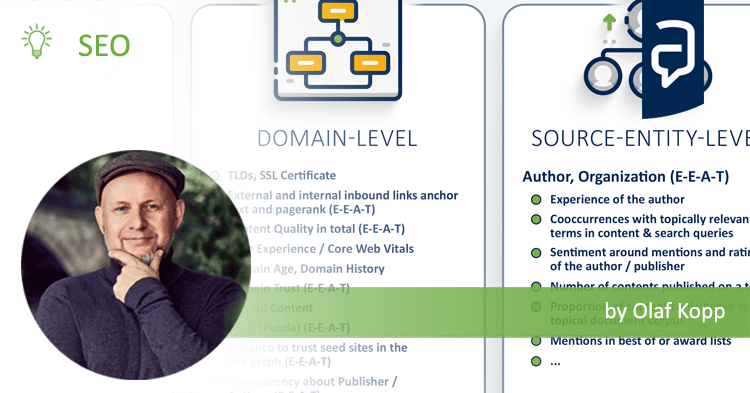

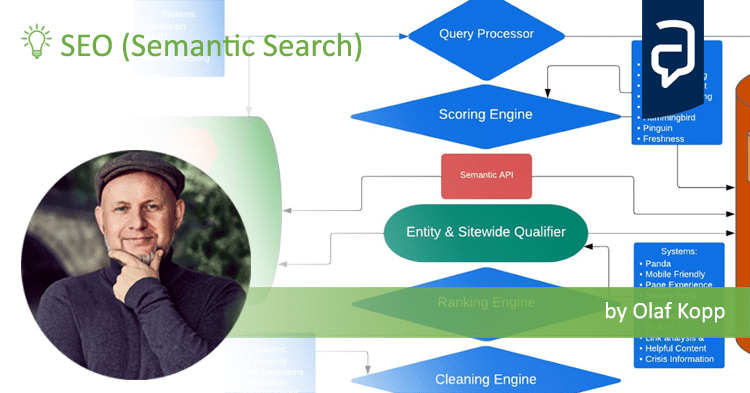

In semantic information retrieval systems, entities play a central role in several tasks:

- Understanding the search query (Search Query Processing)

- Relevance determination at document level (scoring)

- Evaluation at domain level or author level (E-A-T)

- Compilation of search results and SERP features

In all of these tasks, the core of search query processing is the interplay of named entities and the composition of the individual terms of a search query, because together they determine which content is relevant.

The magic of interpreting search terms happens in query processing. The following steps are important here:

- Identification of the thematic ontology in which the search query is located. If the thematic context is clear, Google can select a corpus of content such as text documents, videos or images as potentially suitable search results. This is particularly difficult with ambiguous search terms. More on that in my post Knowledge Panels & SERPs for ambiguous search queries.

- Identification of entities and their meaning in the search term (named entity recognition)

- Understanding the semantic meaning of a search query.

- Identification of the search intent

- Semantic annotation of the search query

- Refinement of the search term

I have deliberately distinguished between the semantic meaning and the search intent here, because the search intent may vary from user to user and may even change over time, while the semantic meaning remains the same.

For certain search queries, such as obvious misspellings, query refinement takes place automatically in the background. If Google is not sure whether a query contains a typo, the user can also trigger the refinement manually. With query refinement, a search query is rewritten in the background so that its meaning can be interpreted better.
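To make automatic refinement of obvious misspellings more concrete, here is a minimal Python sketch. It assumes a small hypothetical vocabulary (`VOCAB`) with invented query frequencies and corrects each unknown term to the most frequent known word within a Levenshtein edit distance of 2. Google's actual spelling system is not described in this article; the vocabulary, counts and threshold are illustrative assumptions.

```python
# Hypothetical vocabulary with invented query frequencies (not real data).
VOCAB = {"mercedes": 900, "spare": 400, "parts": 800, "part": 300, "stoplight": 120}

def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]

def correct_term(term: str, max_distance: int = 2) -> str:
    """Replace an unknown term with the closest, most frequent vocabulary word."""
    if term in VOCAB:
        return term
    # Sort key: smallest distance first, then highest frequency.
    dist, _, best = min((levenshtein(term, w), -freq, w) for w, freq in VOCAB.items())
    return best if dist <= max_distance else term

def refine_query(query: str) -> str:
    """Rewrite a query term by term in the background."""
    return " ".join(correct_term(t) for t in query.lower().split())

print(refine_query("mercedez spare prts"))  # mercedes spare parts
```

Terms that are too far from every known word are left unchanged, which loosely corresponds to the case where a system is unsure whether there is a typo at all.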

Google wants to recognize the meaning and search intent of search queries

In times of increasingly complex search queries, especially through voice search, the interpretation of search queries is one of the biggest challenges for search engines. For a search engine to be useful, it is crucial to understand what the user is looking for. This is not an easy task, especially with unclearly worded search queries. Modern information retrieval systems focus less and less on the keywords a search term contains and more on the meaning of the search query. Entities play a central role in many search queries.

Almost every search term is based on an implicit or explicit question and a search intent. The search intent is mostly implicit and not easy for a machine to identify. Recognizing the search intent is very important for matching it with the purpose of the documents or content in the respective thematic corpus. In times of voice search and mobile devices, it is all the more important for Google to understand search queries and their user intents or meaning as precisely as possible in order to deliver individually suitable search results.

I don’t want to go into more detail about the search intent here. In this post, I want to focus on interpreting the meaning of search queries using entities.

In a 2009 interview with IDG, Ori Allon, then technical lead of the Google Search Quality team, said:

We’re working really hard at search quality to have a better understanding of the context of the query, of what is the query. The query is not the sum of all the terms. The query has a meaning behind it. For simple queries like ‘Britney Spears’ and ‘Barack Obama’ it’s pretty easy for us to rank the pages. But when the query is ‘What medication should I take after my eye surgery?’, that’s much harder. We need to understand the meaning…

This example shows the effectiveness of entity-based search systems in contrast to term-based ones.

A purely term-based search engine would not recognize the difference in meaning of the search queries “red stoplight” and “stoplight red” because the terms in the query are the same, just arranged differently. Depending on the arrangement, the meaning is different. An entity-based search engine recognizes the different context based on the different arrangement. “stoplight red” is its own entity while “red stoplight” is a combination of an attribute and an entity “stoplight”.

In this example it becomes clear that it is not enough to determine the entity via a term. The words and their arrangement in the environment also play an important role in identifying the meaning. Especially terms with different meanings depending on the context are a challenge for Google and search engines. More on that in the post Knowledge Panel and SERPs for ambiguous search queries.
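The “red stoplight” versus “stoplight red” example can be illustrated with a toy comparison. A term-based view reduces a query to an unordered bag of words, so both queries look identical. An order-aware entity lookup matches multi-word entity names as contiguous phrases, so the two queries parse differently. The `ENTITIES` dictionary and its type labels are hypothetical and only serve this illustration.

```python
from collections import Counter

# Hypothetical entity dictionary: ordered token tuples -> entity type.
ENTITIES = {
    ("stoplight", "red"): "Color",        # the shade as an entity of its own
    ("stoplight",): "TrafficSignal",
}
ATTRIBUTES = {"red"}

def bag_of_words(query: str) -> Counter:
    """Term-based view: word order is discarded."""
    return Counter(query.lower().split())

def entity_parse(query: str) -> list[tuple[str, str]]:
    """Order-aware view: greedy longest match of known entities over the tokens."""
    tokens = query.lower().split()
    max_len = max(len(k) for k in ENTITIES)
    result, i = [], 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            span = tuple(tokens[i:i + n])
            if span in ENTITIES:
                result.append((" ".join(span), ENTITIES[span]))
                i += n
                break
        else:  # no entity starts here: keep the token as attribute or plain term
            result.append((tokens[i], "Attribute" if tokens[i] in ATTRIBUTES else "Term"))
            i += 1
    return result
```

Here `bag_of_words("red stoplight") == bag_of_words("stoplight red")`, while `entity_parse` returns an attribute plus the entity “stoplight” for the first query and the single entity “stoplight red” for the second.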

Classification of the search term in a thematic ontology

The classification of a search query in a thematic context is the first step in query processing. Based on this classification, the search query can be further interpreted and a thematic corpus of relevant documents can be selected. In classic, non-semantic search engines, the keywords used in the search terms are compared with the terms typical of a cluster of topics in order to allocate the search query by topic. This process is relatively easy for most search engines insofar as the search terms can be assigned to a thematic context based on the terms used.

In semantic search engines, attempts are made to better understand the meaning behind the search query in order to be able to classify even ambiguous search queries.

It is more difficult with new terms or search queries. In 2015, Google introduced Rankbrain for the thematic assignment of new, previously unknown search queries.

Here is a Google patent on this:

Improving semantic topic clustering for search queries with word co-occurrence and bipartite graph co-clustering

Another scientific work from Google provides some interesting insights into how Google today probably divides search queries into different thematic areas.

This document introduces two methods that Google may use to contextualize search queries. So-called lift scores play a central role in word co-occurrence clustering:

lift(Wi | a) = P(Wi | a) / P(Wi)

In the formula, “Wi” stands for a word together with all terms closely related to its root, such as misspellings, plural and singular forms, or synonyms.

“a” can be any user interaction such as searching for a specific search term or visiting a specific page.

For example, a lift score of 5 means that “Wi” is five times more likely to be searched in connection with “a” than in general.

“A large lift score helps us to construct topics around meaningful rather than uninteresting words. In practice the probabilities can be estimated using word frequency in Google search history within a recent time window.”

In this way, terms can be assigned to specific entities such as Mercedes and/or to the thematic context class “Auto” when someone searches for car spare parts. Terms that frequently co-occur with the search terms can then be attached to the context class and/or entity. This is a quick way to build a cloud of terms for a specific topic. The higher the lift score, the stronger the affinity to the topic:

“We use lift score to rank the words by importance and then threshold it to obtain a set of words highly associated with the context.”
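A minimal sketch of this ranking step, assuming lift(w | a) = P(w | a) / P(w) and estimating both probabilities as the share of queries containing a word. The query lists and the threshold of 2.0 are invented for illustration; the paper estimates the probabilities from Google search history within a recent time window.

```python
from collections import Counter

# Hypothetical logs: CONTEXT_QUERIES are queries tied to an interaction "a"
# (e.g. visiting a car-parts page); ALL_QUERIES is the general log and includes them.
CONTEXT_QUERIES = ["mercedes brake pads", "mercedes oil filter", "cheap brake pads", "oil filter price"]
OTHER_QUERIES = ["cheap flights", "weather today", "pizza price", "cheap hotels", "news today", "price of gold"]
ALL_QUERIES = CONTEXT_QUERIES + OTHER_QUERIES

def word_probs(queries: list[str]) -> dict[str, float]:
    """Estimate P(w) as the share of queries that contain word w."""
    counts = Counter(w for q in queries for w in set(q.lower().split()))
    return {w: c / len(queries) for w, c in counts.items()}

def lift_scores(context_queries: list[str], all_queries: list[str]) -> dict[str, float]:
    """lift(w | a) = P(w | a) / P(w)."""
    p_given_a = word_probs(context_queries)
    p = word_probs(all_queries)
    return {w: p_given_a[w] / p[w] for w in p_given_a if w in p}

def topic_words(context_queries: list[str], all_queries: list[str], threshold: float = 2.0) -> list[str]:
    """Rank words by lift and keep only those above the threshold."""
    lifts = lift_scores(context_queries, all_queries)
    ranked = sorted(lifts.items(), key=lambda kv: kv[1], reverse=True)
    return [w for w, score in ranked if score >= threshold]
```

With this toy data, “mercedes”, “brake”, “pads”, “oil” and “filter” get a lift of 2.5, while generic words such as “cheap” and “price” stay below 1 and are dropped from the topic.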

This method works particularly well when “Wi” is already known, for example for search terms relating to known brands or categories. If “Wi” cannot be clearly defined because the search terms for the same topic differ too much, Google could use a second method: “weighted bigraph clustering”.

This method is based on two assumptions.

- Users with the same intent formulate their search queries differently, but search engines return similar search results for them.

- Conversely, the URLs displayed at the top of the search results for a query are similar to each other.

With this method, search terms are compared with the top-ranking URLs and query/URL pairs are formed, whose relationship is weighted according to users' click rates and impressions. In this way, similarities can be established even between search terms that do not share a common root, and semantic clusters can be formed from them.
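The query/URL pairing can be sketched as a weighted bipartite graph: each query is represented by a vector of click weights over URLs, and queries whose vectors point in a similar direction are grouped together even if they share no words. The click counts, the `.example` URLs and the 0.5 similarity threshold are hypothetical, and impressions are left out for brevity; the paper's actual weighting is not reproduced here.

```python
import math

# Hypothetical click counts: query -> {url: clicks}
CLICKS = {
    "cheap flights to rome": {"flyfast.example/rome": 40, "tickets.example/italy": 25},
    "low cost airline rome": {"flyfast.example/rome": 30, "tickets.example/italy": 10},
    "rome hotel deals": {"stay.example/rome": 50},
}

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity of two sparse click vectors."""
    dot = sum(u[k] * v.get(k, 0.0) for k in u)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

def cluster_queries(clicks: dict[str, dict[str, float]], threshold: float = 0.5) -> list[list[str]]:
    """Single-link grouping with union-find: similar queries end up in one cluster."""
    queries = list(clicks)
    parent = {q: q for q in queries}

    def find(q: str) -> str:
        while parent[q] != q:
            parent[q] = parent[parent[q]]  # path halving
            q = parent[q]
        return q

    for i, a in enumerate(queries):
        for b in queries[i + 1:]:
            if cosine(clicks[a], clicks[b]) >= threshold:
                parent[find(a)] = find(b)

    clusters: dict[str, list[str]] = {}
    for q in queries:
        clusters.setdefault(find(q), []).append(q)
    return list(clusters.values())
```

The two flight queries share no root word for “cheap”/“low cost” but land in one cluster because users click the same URLs, while the hotel query forms its own cluster.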

Rankbrain is an entity-based search query processor

When Google announced the introduction of Rankbrain for better interpretation of search queries in 2015, the SEO industry produced a multitude of assumptions and opinions. For example, there was talk of the birth of machine learning or AI at Google. In fact, as I describe in another post, Google has been working intensively with deep learning since 2011 as part of the Google Brain project. However, Rankbrain was the first official confirmation from Google that machine learning is also used in Google search.

Probably the most precise information from Google about Rankbrain so far was available in the Bloomberg interview from October 2015.

A statement in the interview caused many misunderstandings about Rankbrain.

RankBrain is one of the “hundreds” of signals that go into an algorithm that determines what results appear on a Google search page and where they are ranked. In the few months it has been deployed, RankBrain has become the third-most important signal contributing to the result of a search query.

Many SEO media interpreted the statement to mean that Rankbrain is one of the three most important ranking factors. But a signal is not a factor. Rankbrain has a major impact on how search results are selected and ranked, but it is not a ranking factor in the way that content or links are.

There is another interesting statement in this interview:

RankBrain uses artificial intelligence to embed vast amounts of written language into mathematical entities — called vectors — that the computer can understand. If RankBrain sees a word or phrase it isn’t familiar with, the machine can make a guess as to what words or phrases might have a similar meaning and filter the result accordingly, making it more effective at handling never-before-seen search queries .

With Rankbrain, entities are identified in a search query and compared with the facts from the Knowledge Graph. If the terms are ambiguous, Google uses vector space analysis to find out which of the possible entities best fits the term. The surrounding words in the search query are a first indication of the context. This may also be one reason why Rankbrain is used for long-tail search queries, among other things.
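How surrounding words could steer the choice between candidate entities can be shown with toy embeddings: average the vectors of the known context words and pick the candidate entity with the highest cosine similarity. The three-dimensional vectors and the “Jaguar” candidates are made up; real systems use learned embeddings with hundreds of dimensions, and nothing here reflects how RankBrain is actually implemented.

```python
import math

# Made-up 3-d embeddings; dimensions loosely mean "cars", "animals", "geography".
WORD_VECTORS = {
    "engine": [0.9, 0.0, 0.1],
    "price": [0.8, 0.1, 0.0],
    "habitat": [0.0, 0.9, 0.3],
    "rainforest": [0.0, 0.6, 0.8],
}
ENTITY_VECTORS = {
    "Jaguar (car brand)": [1.0, 0.0, 0.0],
    "Jaguar (animal)": [0.0, 1.0, 0.2],
}

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def disambiguate(query: str, candidates: dict[str, list[float]] = ENTITY_VECTORS) -> str:
    """Pick the candidate entity closest to the average vector of the known context words."""
    context = [WORD_VECTORS[w] for w in query.lower().split() if w in WORD_VECTORS]
    if not context:
        raise ValueError("no known context words in query")
    centroid = [sum(dim) / len(context) for dim in zip(*context)]
    return max(candidates, key=lambda name: cosine(centroid, candidates[name]))
```

`disambiguate("jaguar engine price")` resolves to the car brand, `disambiguate("jaguar habitat rainforest")` to the animal, although the ambiguous word itself is the same.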

The problem in the pre-Rankbrain period was the lack of scalability when identifying and creating entities in the Knowledge Graph. The Knowledge Graph is still primarily based on information from Wikidata, which is verified against Wikipedia, i.e. a manually maintained and therefore rather static system that does not scale well.

“Wikipedia is often used as a benchmark for entity mapping systems. As described in Subsection 3.5 this leads to sufficiently good results, and we argue it would be surprising if further effort in this area would lead to reasonable gains.” Source: From Freebase to Wikidata – The Great Migration

You can find more about this in other articles here on the blog:

- How does Google process information from Wikipedia for the Knowledge Graph?

- How can Google identify and interpret entities from unstructured content?

Another highly recommended source for first-hand information on how Google Search works is Paul Haahr’s SMX West 2016 presentation, mentioned in another post.

In the Q&A, Danny Sullivan asked Jeff how RankBrain might help determine document quality or authority. His answer:

This is all a function of the training data that it gets. It sees not just web pages but it sees queries and other signals so it can judge based on stuff like that.

The exciting thing about this statement is that it implicitly suggests that Rankbrain only intervenes after a first set of search results has been selected. This is an important finding: RankBrain does not refine the search query before Google retrieves results, but only afterwards.

At that point, RankBrain may be able to refine or reinterpret the query in order to select and re-order the results for it.

Summary: RankBrain is a deep learning-based algorithm that is applied after an initial subset of search results has been selected and relies on text vectors and other signals to better interpret complex search queries with natural language processing.

In the following I would like to present some Google patents that can play a role in the interpretation of search queries. Some of the following patents could be the basic technology on which Rankbrain is built with its machine learning technologies.

Identification of acronym expansions

This patent was first filed by Google in August 2011 and refiled under a new name in October 2017. It is particularly interesting because one of its inventors is the deep learning specialist Thomas Strohmann, who played a leading role in the development of Rankbrain.

Entities are not mentioned in this patent, but it describes another method how Google rewrites or refines original search queries based on the information from a first subset of documents in order to display more relevant search results at the end. This patent is primarily about the interpretation of acronyms in search queries. However, the process described is a blueprint for many other methods that deal with the refinement or rewriting of search queries.

The acronym and synonym engine described in the patent can be replaced by the Knowledge Graph.

Query rewriting with entity detection

This patent was first filed by Google in September 2004 and refiled under a new name in October 2017. It is particularly interesting because its roots predate Rankbrain, Hummingbird and the Knowledge Graph, yet it still appears to be relevant today, which suggests that the methodology is a staple of today's Google search.

The methodology describes the process of how search queries with an entity reference are rewritten if necessary or at least how suggestions are played out in order to obtain more relevant search results.

At first glance, the patent seems quite simple and rudimentary, since in the examples given the search queries are merely supplemented with search operators. For example, in one of the examples, the search query “mutual funds business week” is rewritten as “mutual funds source:business week”. What I find exciting about the patent is that entity IDs are assigned via domains in the examples. That would support the assumption that domains are unique digital representations of entities.

In addition, it is described that the search query can also be rewritten for different terms with the same meaning, such as synonyms or misspellings, and that content is delivered as search results that match both the original and the rewritten search query.
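The “mutual funds business week” example can be sketched as a rewrite rule: if a known publication name appears at the end of the query, it is turned into a `source:` operator, and both the original and the rewritten query are kept so that results matching either can be returned. The `KNOWN_SOURCES` set and the suffix-matching rule are hypothetical simplifications for illustration, not the patent's actual procedure.

```python
# Hypothetical set of publication names recognized as source entities.
KNOWN_SOURCES = {"business week", "new york times"}

def rewrite_with_source(query: str) -> list[str]:
    """Return the normalized original query plus a source:-rewritten variant if one applies."""
    q = " ".join(query.lower().split())
    variants = [q]
    for name in KNOWN_SOURCES:
        if q.endswith(" " + name):
            topic = q[: -len(name)].strip()
            variants.append(f"{topic} source:{name}")
            break
    return variants
```

For “Mutual funds Business Week” this yields both “mutual funds business week” and “mutual funds source:business week”; a query without a known source is returned unchanged.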

Methods, systems, and media for interpreting queries

This patent, filed by Google in 2015, describes various methods for how search engines can interpret search queries. It is exciting because it combines methods from Rankbrain with natural language processing (BERT & MUM).

This patent describes the basic problem and solution approaches to deal with the challenges.

For example, in response to providing the search query “action movie with tom cruise,” these search engines can provide irrelevant search results like “Last Action Hero” and “Tom and Jerry” simply because a portion of the search query is included in the title of the pieces of content. Accordingly, gaining an understanding of the search query can produce more meaningful search results.

With the method described here, search queries are broken down into individual sub-terms. These terms are checked to see if they are related to a known entity. An entity score is used to determine how close the terms are to the respective entities. The context of the search query, i.e. also the terms without direct entity reference in the search term, also plays a role. Based on the most relevant entity, a search is then performed according to the entity type and the corresponding search results are returned.

Here’s an example. A search query like “Action movie starring Tom Cruise” would be broken down into the separate components “Action”, “Movie”, “Tom” and “Cruise”. The term “Action” can be an entity of the entity type Movie, Genre or Series. Which of these entity types is most likely meant can be determined, for example, from an entity score based on frequency or popularity.

Depending on the type of search engine (image search, video search …), appropriate media formats would be delivered to match the searched entity type “Action Film” and the entity “Tom Cruise”. The patent refers to a wide variety of forms of searches, such as search queries in an image search or via voice search using a digital assistant.
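The decomposition into sub-terms with entity scores can be sketched as follows: each n-gram is looked up in a small entity index (longest match first), an ambiguous term such as “action” is resolved to the entity type with the highest score, and the remaining terms are kept as context. `ENTITY_INDEX`, its types and scores, and the function `interpret` are hypothetical; the patent does not publish its scoring function.

```python
# Hypothetical entity index: surface form -> candidate (entity type, entity score) pairs.
ENTITY_INDEX = {
    "action": [("Genre", 0.7), ("Movie", 0.2), ("Series", 0.1)],
    "movie": [("MediaType", 0.9)],
    "tom cruise": [("Person", 0.95)],
    "tom": [("Person", 0.3)],
}
STOPWORDS = {"with", "starring"}

def interpret(query: str, max_ngram: int = 3) -> dict[str, list]:
    """Split a query into entity n-grams (longest match first) and leftover context terms."""
    tokens = [t for t in query.lower().split() if t not in STOPWORDS]
    entities, context, i = [], [], 0
    while i < len(tokens):
        for n in range(min(max_ngram, len(tokens) - i), 0, -1):
            phrase = " ".join(tokens[i:i + n])
            if phrase in ENTITY_INDEX:
                # Resolve ambiguity by taking the entity type with the highest score.
                etype, score = max(ENTITY_INDEX[phrase], key=lambda c: c[1])
                entities.append((phrase, etype, score))
                i += n
                break
        else:  # no known entity starts at this token
            context.append(tokens[i])
            i += 1
    return {"entities": entities, "context": context}
```

For “latest action movie with tom cruise”, the sketch returns “action” as a Genre, “movie” as a media type and “tom cruise” as one Person entity (the longer match beats “tom”), with “latest” left over as context.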

Query generation using structural similarity between documents

This patent was filed in September 2016. The exciting thing about this patent is that two important deep learning engineers, Paul Haahr and Yonghui Wu, were among the inventors. In addition, Yonghui Wu was one of the leading minds in the development of Rankbrain.

The patent describes a method by which a search engine can generate additional search queries for an entity and store them in a query store. These search queries could then be used, for example, as refinements or paraphrases of an original search term.

Based on the content structure of similar documents, search query refinements can be abstracted. For example, from the sentence “Joseph Heller wrote the influential novel catch 22 about americain serviceman in WW2.” the search query “joseph heller catch” can be extracted.

Identification of entities in search queries (Named Entity Recognition)

Google wants to find out which entity a question is about. This process is also referred to as “named entity recognition”. This is not so easy if the entity itself does not appear in the search term. Google can identify the entity being searched for through the entities occurring in the search term and the relational context between entities.

An example:

The question “who is ceo at vw” or the implicit question “ceo vw” leads to the delivery of the following search results:

The knowledge panel, the One Box and the regular search results all target the entity Herbert Diess, although it does not appear as a keyword in the search query. The “People also ask” questions are not directly related to the entity being searched for.

In this example, two entities and a connection type or predicate phrase play an important role in answering the question.

- VW (entity)

- Boss (connection type)

- Herbert Diess (entity)

Google can only answer the question about the entity “Herbert Diess” by combining the entity “VW” with the connection type “Boss”.

That’s why Google attaches so much importance to identifying entities in the first step. As a central collection point for all terms identified as entities, Google uses the Knowledge Graph.

It is important for Google to identify entities in search terms and establish connections to other entities. The relationships between the entities are at least as important, because only in this way can Google answer implicit questions, and thus also concrete questions about entities, directly in the SERPs. Here is another example:

The search queries “Who is the founder of Adidas?” (explicit question) and “Founder Adidas” (implicit question) lead to nearly the same search results:

Google recognizes that the entity Adolf Dassler is being searched for here, although the name does not appear in the search query. It doesn’t matter whether I ask an implicit question in the form of the search term “Founder Adidas” or an explicit question. The entity “Adidas” and the relational context “Founder” are sufficient for this.
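Answering “ceo vw” or “founder adidas” without the answer entity appearing in the query can be sketched as a lookup of (subject entity, relation) in a store of knowledge-graph triples. The `TRIPLES` dictionary and the alias tables are a tiny hypothetical stand-in for the Knowledge Graph, filled with the examples from this article (the VW example reflects the time of writing).

```python
from typing import Optional

# Tiny stand-in for a knowledge graph: (subject, predicate) -> object.
TRIPLES = {
    ("Volkswagen", "ceo"): "Herbert Diess",
    ("Adidas", "founder"): "Adolf Dassler",
}
# Hypothetical alias tables mapping query words to entities and connection types.
ENTITY_ALIASES = {"vw": "Volkswagen", "volkswagen": "Volkswagen", "adidas": "Adidas"}
PREDICATE_ALIASES = {"ceo": "ceo", "boss": "ceo", "founder": "founder", "founded": "founder"}

def answer(query: str) -> Optional[str]:
    """Combine a recognized entity with a connection type to find the implied entity."""
    words = [w.strip("?") for w in query.lower().split()]
    subject = next((ENTITY_ALIASES[w] for w in words if w in ENTITY_ALIASES), None)
    predicate = next((PREDICATE_ALIASES[w] for w in words if w in PREDICATE_ALIASES), None)
    if subject is None or predicate is None:
        return None
    return TRIPLES.get((subject, predicate))
```

The explicit question “Who is the founder of Adidas?” and the implicit “founder adidas” both reduce to the pair (Adidas, founder) and therefore return the same entity, which mirrors the behavior described above.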

Below are some Google patents related to Named Entity Recognition.

Selecting content using entity properties

This patent was first filed by Google in April 2014 and refiled under a new name in October 2017. It describes how Google can use a confidence score to determine the entity hierarchy in a search query.

To recognize the relevance of an entity in a search query, Google can use a confidence score.

The method can include identifying a property of the entity of the search query. The method can include the data processing system determining a match between a property of an entity of content selection criteria and the property of the entity of the search query. The method can include the data processing system selecting the content item as a candidate for display on the user device based on the match and the confidence score. Source: https://patents.google.com/patent/US9542450B1/en

According to this confidence score, it is then decided which is the main entity in the search query and the appropriate documents for the SERPs are determined accordingly. Entity attributes can also be included in the selection process using both a query graph and a content selection criteria graph.

Associating an entity with a search query

This patent, granted to Google in 2016, is about how an entity can be recognized in a search query, or how Google can recognize that a search query actually refers to an entity. There is also a related patent published in 2013.

The patent describes the following process steps:

- Receiving a search query

- Identifying an entity associated with the search term

- Providing an entity summary so that matching results can be displayed in response to the search query. The summary includes both relevant information about the entity and optional additional search queries.

- Identifying the optional entity search query and associating it with the selected option. (More on this in the patent ….)

- Identifying documents that match the entities or the entity

- Identifying further entities from the selected documents

- Linking the entity(ies) to the search query

- Determining a ranking for the entities

Providing search results based on a compositional query

This patent was filed by Google in 2012 and republished in an updated form in May 2021. It runs until 2035. The patent describes the process for returning search results to a search query with at least two related entities. Such a search query could be, for example, “Japanese restaurant near a bank”. The search intent behind this search query could be that the user would like to visit a Japanese restaurant and stop by a bank before or after.

Another search query could be “banks that went bankrupt during the economic crisis”. In contrast to the previous search query, this is not about a location but a temporal relationship between the entities.

The search queries can also be more complex and contain more than two entities.

Such complex search queries can only be answered with data recorded in a Knowledge Graph. A virtually unlimited number of references can be established between a wide variety of entities via the relationship information described by the edges. A classic tabular database queried with SQL cannot answer such search queries in a meaningful way.
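A compositional query such as “Japanese restaurant near a bank” combines two entity types with a relation. As a sketch, entities can be stored with a type and coordinates, and the “near” relation evaluated as a distance threshold using the haversine formula. The entities, coordinates and the 300 m threshold are hypothetical; how Google models such relations internally is not specified in the patent summary above.

```python
import math

# Hypothetical entities: name -> (entity type, latitude, longitude)
ENTITIES = {
    "Sushi Bar Aoi": ("JapaneseRestaurant", 52.3759, 9.7320),
    "Ramen Ya": ("JapaneseRestaurant", 52.3600, 9.7000),
    "City Bank": ("Bank", 52.3765, 9.7330),
}

def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two coordinates."""
    r = 6_371_000  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def near(type_a: str, type_b: str, max_m: float = 300.0) -> list[tuple[str, str]]:
    """Return (a, b) pairs where an entity of type_a lies within max_m metres of one of type_b."""
    pairs = []
    for a, (ta, lat_a, lon_a) in ENTITIES.items():
        if ta != type_a:
            continue
        for b, (tb, lat_b, lon_b) in ENTITIES.items():
            if tb == type_b and haversine_m(lat_a, lon_a, lat_b, lon_b) <= max_m:
                pairs.append((a, b))
    return pairs
```

With these made-up coordinates, `near("JapaneseRestaurant", "Bank")` returns only the restaurant about 100 m from the bank; a temporal relation such as “during the economic crisis” would swap the distance test for a date-range test.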

Semantic enrichment of search queries

It is not always easy for Google to identify the relevant entities in search queries. Search queries can be automatically enriched with additional semantic information or annotations in the background, or suggested to the user via autosuggest. The matching of the search query and the entity no longer takes place only on the basis of the text entered, but also takes into account semantic relationships between entities and attributes.

The following example searches for the entity “Ann Dunham”. A purely term-based search engine would have problems answering the search query “obama's parents”. Through the interaction of term-based and entity-based search, “Ann Dunham” can be returned as Obama's mother.

In practice, the result looks like this. In addition to his mother Ann Dunham, the second entity searched for, his father Barack Hussein Obama Sr., is shown, as well as the knowledge panel for Barack Obama. Barack Obama's knowledge panel is displayed because the system reacts to the term “obama” in the search query. The other two entity boxes are output based on the additional semantic information from the Knowledge Graph.

The practical thing about this dual system when interpreting search queries is that results can also be returned if no entity is searched for in a search term.

In addition to the entity-based interpretation of search queries, the term-based method can also be supported by entity-type-based methods. This is relevant for queries that search for multiple entities from a type class, such as “Hannover sights”. Here a box is output that lists several entities.

Depending on the entity type, search queries often return either the currently most relevant entity, carousels, or the Knowledge Graph boxes described above. The most relevant entities are displayed in such boxes depending on their weighting in relation to the search query. Similar to documents, Google can determine the proximity or relevance of entities to the search query via vector space analysis, for example with Word2Vec. The smaller the angle between the search query vector and the entity vector, the more relevant or closer the term and entity are.
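The angle criterion in the paragraph above is usually expressed as cosine similarity, the standard similarity measure for Word2Vec-style embeddings; whether Google scores entities exactly this way is an assumption. For a query vector q and an entity vector e with n dimensions:

$$
\cos\theta = \frac{\mathbf{q}\cdot\mathbf{e}}{\lVert\mathbf{q}\rVert\,\lVert\mathbf{e}\rVert} = \frac{\sum_{i=1}^{n} q_i e_i}{\sqrt{\sum_{i=1}^{n} q_i^{2}}\,\sqrt{\sum_{i=1}^{n} e_i^{2}}}
$$

For the made-up vectors q = (1, 2, 0) and e = (2, 4, 1), this gives cos θ = 10 / (√5 · √21) = 10 / √105 ≈ 0.976, i.e. an angle of roughly 12.6°. The closer cos θ is to 1, the smaller the angle and the closer query and entity are.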

Refinement of search queries

Search engines can use query refinement to rewrite search terms in order to assign a more precise meaning to the search query and deliver corresponding search results. This can happen in the background without the user noticing, or it can be actively triggered by the user. Particularly for search queries aimed very generically at an entity, it is often unclear what information the user wants to find. Up to now, the most important data about an entity has been displayed in the Knowledge Panel in a standardized way, aligned with the respective entity type. (For more information, see my article How Google creates Knowledge Panels?) Nowadays, the user can also use buttons to specify more precisely which media formats and information he would like to see for the entity. Clicking such a button evidently rewrites the search query.

Below are some Google patents related to query refinement, i.e. refining or rewriting search queries.

Refining search queries

The publication date of this patent shows that Google has been dealing with this complex topic for a very long time. It is one of Google’s first patents for the semantic interpretation of search terms and was created by Ori Allon in 2009.

The patent primarily deals with the interpretation of search queries and their refinement. According to the patent, search queries are refined using entities that frequently appear together in the documents ranking for the original search query or its synonyms. As a result, entities can reference each other, and previously unknown entities can be identified quickly.

Clustering query refinements by inferred user intent

This patent was first filed by Google in March 2016 and refiled under an updated name in October 2017. It describes a method by which Google creates refinements of search queries to better understand what the user is really looking for. These refinements are the related search queries that appear below the search results.

These refinements are especially important when user intent is unclear, in order to play out more relevant results and related search queries. For this purpose, all possible search query refinements are organized into clusters that map the different aspects related to the original search query. These aspects require different search results.

The clustering of search queries is solved using a graph. It takes into account session-dependent relationships between search terms as well as relationships between queries and specific documents. The graph effectively considers both content-based similarity and the co-occurrence similarity of sessions determined from query logs. That is, it analyzes co-occurrences of consecutive search queries as well as click behavior.

In the graph, the original query as well as refinements and matching documents are represented as nodes. Additional nodes represent the related search queries occurring as co-occurrences within sessions. All of these nodes are connected by edges.

Clusters of search query refinements and documents can be formed via visit probability vectors.
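One common way to obtain visit probability vectors is a random walk with restart on the graph: starting from each refinement, the walk's visit probabilities over all nodes form a vector, and refinements with similar vectors are grouped together. The sketch below assumes this technique; the graph, the restart probability of 0.3, the 50 iterations and the 0.5 threshold are hypothetical choices, and the patent's exact edge weights are not reproduced.

```python
import math

# Hypothetical undirected graph: refinements and clicked documents as nodes,
# edges from clicks (query-document) and session co-occurrence (query-query).
GRAPH = {
    "jaguar price": ["doc_cars", "jaguar xf review"],
    "jaguar xf review": ["doc_cars", "doc_reviews", "jaguar price"],
    "jaguar habitat": ["doc_wildlife", "jaguar diet"],
    "jaguar diet": ["doc_wildlife", "jaguar habitat"],
    "doc_cars": ["jaguar price", "jaguar xf review"],
    "doc_reviews": ["jaguar xf review"],
    "doc_wildlife": ["jaguar habitat", "jaguar diet"],
}

def visit_vector(start: str, restart: float = 0.3, steps: int = 50) -> list[float]:
    """Random walk with restart from `start`; returns visit probabilities for all nodes."""
    nodes = list(GRAPH)
    prob = {n: 0.0 for n in nodes}
    prob[start] = 1.0
    for _ in range(steps):
        nxt = {n: 0.0 for n in nodes}
        nxt[start] += restart  # total mass is 1, so a fraction `restart` jumps back
        for n, p in prob.items():
            share = (1 - restart) * p / len(GRAPH[n])
            for m in GRAPH[n]:
                nxt[m] += share
        prob = nxt
    return [prob[n] for n in nodes]

def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def cluster_refinements(refinements: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy grouping: join the first cluster whose first member has a similar visit vector."""
    vectors = {r: visit_vector(r) for r in refinements}
    clusters: list[list[str]] = []
    for r in refinements:
        for cluster in clusters:
            if cosine(vectors[r], vectors[cluster[0]]) >= threshold:
                cluster.append(r)
                break
        else:
            clusters.append([r])
    return clusters
```

On this toy graph the car-related and the wildlife-related refinements end up in two separate clusters, i.e. two different aspects of the ambiguous query “jaguar”.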

Regarding the related queries, there is an interesting passage in the patent that describes how the related queries are generated:

Related queries are typically mined from the query logs by finding other queries that co-occur in sessions with the original query. Specifically, query refinements, a particular kind of related queries, are obtained by finding queries that are most likely to follow the original query in a user session. For many popular queries, there may be hundreds of related queries mined from the logs using this method. However, given the limited available space on a search results page, search engines typically only choose to display a few of the related queries.
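The mining step in this passage can be sketched by counting which queries directly follow the original query within the same session and keeping only the top few, since space on the results page is limited. The session log and the cutoff `k` are invented for illustration.

```python
from collections import Counter

# Hypothetical session logs: each session is an ordered list of queries.
SESSIONS = [
    ["jaguar", "jaguar price", "jaguar xf review"],
    ["jaguar", "jaguar habitat"],
    ["jaguar", "jaguar price"],
    ["weather", "jaguar"],
]

def top_refinements(original: str, sessions: list[list[str]], k: int = 2) -> list[tuple[str, int]]:
    """Count queries that immediately follow `original` in a session; return the k most common."""
    follows: Counter = Counter()
    for session in sessions:
        for current, nxt in zip(session, session[1:]):
            if current == original and nxt != original:
                follows[nxt] += 1
    return follows.most_common(k)
```

For “jaguar”, this returns “jaguar price” (followed twice) ahead of “jaguar habitat” (once); “jaguar xf review” is not counted because it follows a refinement rather than the original query.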

Other exciting Google patents related to search query processing

Below are more exciting Google patents that I have discovered related to modern Search Query Processing, but cannot be clearly assigned to any of the above sub-steps.

Discovering entity actions for an entity graph

This patent was first filed by Google in January 2014 and refiled under an updated name in October 2017. It describes how Google identifies entity-related activities based on situational, short-term fluctuations in search volume. These can then, for example, be added to a knowledge panel at short notice, or content that takes these current activities into account can be temporarily pushed up in the rankings. This way, Google can react to situational events around an entity and adjust the SERPs accordingly.
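Detecting short-term fluctuations in search volume can be sketched with a simple z-score test: compare the latest daily volume of an entity-related query with the mean and standard deviation of a baseline window. The volumes and the threshold of three standard deviations are hypothetical; the patent summary above does not specify the statistical test.

```python
import statistics

def is_spike(history: list[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than z_threshold standard deviations above the baseline mean."""
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return latest > mean
    return (latest - mean) / stdev > z_threshold

# Hypothetical daily search volume for an entity-related query such as "<band> tour tickets".
baseline = [120, 130, 125, 118, 122, 127, 124]
print(is_spike(baseline, 126))  # False: normal fluctuation
print(is_spike(baseline, 900))  # True: situational spike
```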

Identifying and ranking attributes of entities

This Google patent, published in 2015, is probably one of the most exciting patents on this topic. It describes how Google splits the search query data for each search term into a part describing the entity and attached parts. These attached parts can be attributes, for example. In this way, search queries can be analyzed to determine what types of information users typically want to receive.

In many cases, the types of information a user is interested in for an entity (e.g., a person or a topic) differ. By analyzing search queries, the most frequently requested information for a given entity can be identified. Then, when a user sends a search query related to the particular entity, the most frequently requested information can be provided in response to the query. For example, a brief summary of the most frequently requested facts for the particular entity and other similar entities can be provided such as in a Knowledge Panel or One Box.

This takes into account how often specific information is searched for the entity itself as well as for the entity type in general. For example, if users frequently search for albums, lyrics and tour dates of the band Phoenix, and for band members and songs of musicians in general, this combination determines the information provided in the Knowledge Panel.
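The combination of entity-level and type-level demand can be illustrated with a simple weighted blend of normalized request frequencies. The weighting scheme, the 0.7 weight and the counts below are hypothetical; the patent is not described here in enough detail to know how Google actually combines the two signals.

```python
def rank_attributes(entity_counts, type_counts, weight=0.7, top_k=3):
    """Score attributes by blending how often they are requested for the entity
    itself with how often they are requested for its entity type in general."""
    e_total = sum(entity_counts.values()) or 1
    t_total = sum(type_counts.values()) or 1
    attributes = set(entity_counts) | set(type_counts)
    scores = {
        a: weight * entity_counts.get(a, 0) / e_total
        + (1 - weight) * type_counts.get(a, 0) / t_total
        for a in attributes
    }
    # Highest score first; the attribute name breaks ties deterministically.
    return sorted(scores, key=lambda a: (-scores[a], a))[:top_k]

# Hypothetical request counts for the band Phoenix and for musicians in general.
phoenix = {"albums": 40, "lyrics": 35, "tour dates": 25}
musicians = {"members": 50, "songs": 30, "albums": 20}
print(rank_attributes(phoenix, musicians))  # ['albums', 'lyrics', 'tour dates']
```

Raising `top_k` lets type-level attributes such as "members" fill the remaining slots, which is one way a panel could mix entity-specific and generic information.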

Learning from User Interactions in Personal Search via Attribute Parameterization

The scientific paper “Learning from User Interactions in Personal Search via Attribute Parameterization” was published in 2017. It describes how Google could establish semantic attribute relationships between search queries and the documents clicked on by analyzing how users interact with individual documents, and how this can even support a self-learning ranking algorithm:

“The case in private search is different. Users usually do not share documents (e.g., emails or personal files), and therefore directly aggregating interaction history across users becomes infeasible. To address this problem, instead of directly learning from user behavior for a given [query, doc] pair like in web search, we instead choose to represent documents and queries using semantically coherent attributes that are in some way indicative of their content.

This approach is schematically described in Figure 2. Both documents and queries are projected into an aggregated attribute space, and the matching is done through that intermediate representation, rather than directly. Since we assume that the attributes are semantically meaningful, we expect that similar personal documents and queries will share many of the same aggregate attributes, making the attribute level matches a useful feature in a learning-to-rank model.”
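The idea of matching queries and documents through an intermediate attribute space, rather than directly, can be sketched with attribute sets and two overlap features that a learning-to-rank model could consume. Representing attributes as plain string labels and using set overlap and Jaccard similarity are simplifying assumptions; the attribute labels are hypothetical, and the paper learns from aggregated interaction data rather than computing hand-made features like these.

```python
def attribute_match_features(query_attrs, doc_attrs):
    """Match a query and a document via their attributes (here: sets of
    attribute labels) and return overlap features for a ranking model."""
    shared = query_attrs & doc_attrs
    union = query_attrs | doc_attrs
    return {
        "shared_count": len(shared),
        "jaccard": len(shared) / len(union) if union else 0.0,
    }

# Hypothetical attributes of a personal-search query and an email.
query = {"type:flight", "airline:lufthansa", "intent:booking"}
email = {"type:flight", "airline:lufthansa", "sender:noreply"}
print(attribute_match_features(query, email))  # {'shared_count': 2, 'jaccard': 0.5}
```

Because the features depend only on attributes, they can be learned and shared across users even though no two users have the same emails.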

Evaluating semantic interpretations of a search query

This Google patent from July 2019 can be understood as a further development of, or addition to, Rankbrain. It describes a method for determining different semantic interpretations of a search query. Each semantic interpretation is linked to a canonical search query, and the current search query is modified based on that canonical query.

Similar to the aforementioned patent regarding semantic annotations, the modified search queries are already annotated with a semantic meaning. By comparing the search results for the original search query with those for the modified one, possible semantic interpretations can be both validated and weighted relative to each other. The degree of similarity between the two result sets indicates how close an interpretation is to the original query. It is based on how frequently certain keywords associated with the original search query occur in the modified search results: a higher keyword frequency may indicate that the modified search query is more likely to match the intended semantic meaning.
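The keyword-frequency comparison can be sketched as follows: for each candidate interpretation, count how often keywords associated with the original query appear in the results of its modified query, then normalize the counts into relative weights. Whitespace tokenization, raw counts and the sample snippets are hypothetical simplifications; additional signals such as click-through rate are not modeled.

```python
def score_interpretations(interpretations, keywords):
    """Weight candidate interpretations of a query by how often keywords
    associated with the original query occur in each interpretation's results."""
    counts = {}
    for rewrite, snippets in interpretations.items():
        tokens = " ".join(snippets).lower().split()
        counts[rewrite] = sum(tokens.count(k) for k in keywords)
    total = sum(counts.values())
    # Normalize raw counts into relative weights (all zero if nothing matched).
    return {r: (c / total if total else 0.0) for r, c in counts.items()}

# Hypothetical result snippets for two canonical rewrites of "jaguar price".
interpretations = {
    "jaguar (car brand)": ["New Jaguar car review with price and specs",
                           "Jaguar car prices and models"],
    "jaguar (animal)": ["Jaguar habitat in the rainforest",
                        "Cost of jaguar conservation projects"],
}
keywords = ["price", "prices", "cost"]
print(score_interpretations(interpretations, keywords))
```

Here the car interpretation receives twice the weight of the animal interpretation (two keyword hits versus one).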

In some cases, additional signals such as click-through rate or website traffic data may be used to determine the most likely semantic interpretation.

Query Suggestions based on entity collections of one or more past queries

Another Google patent from July 2019 describes a method for Google Suggest to generate suggestions from past search queries. Entities play a role here as well: entities related to those that appeared in past suggestions or in past search queries form the basis of a similarity metric used to determine new suggestions.
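A minimal sketch of entity-based suggestion ranking: expand the entities of past queries with related entities, then rank candidate suggestions by how strongly their entities overlap with this profile. The patent's actual similarity metric is not specified here, so Jaccard similarity, the `related` mapping and the sample entities are hypothetical assumptions.

```python
def jaccard(a, b):
    """Share of entities two sets have in common."""
    return len(a & b) / len(a | b) if a | b else 0.0

def suggest(past_query_entities, candidates, related, top_k=2):
    """Rank candidate suggestions by the Jaccard similarity between their
    entities and the entities of past queries expanded with related entities."""
    profile = set(past_query_entities)
    for entity in past_query_entities:
        profile |= related.get(entity, set())
    ranked = sorted(candidates, key=lambda c: (-jaccard(candidates[c], profile), c))
    return ranked[:top_k]

# Hypothetical entity data.
past = {"Phoenix (band)"}
related = {"Phoenix (band)": {"Thomas Mars", "Wolfgang Amadeus Phoenix"}}
candidates = {
    "thomas mars solo album": {"Thomas Mars"},
    "wolfgang amadeus phoenix vinyl": {"Wolfgang Amadeus Phoenix", "Vinyl"},
    "phoenix arizona weather": {"Phoenix (city)", "Weather"},
}
print(suggest(past, candidates, related))
# ['thomas mars solo album', 'wolfgang amadeus phoenix vinyl']
```

The ambiguous suggestion about the city of Phoenix drops out because none of its entities is connected to the band.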

FURTHER GOOGLE PATENTS FOR ENTITY-BASED INTERPRETATION OF SEARCH QUERIES

I will not go into detail about the following additional Google patents, but I do not want to leave them unmentioned:

THE INTERACTION OF RANKBRAIN, BERT, MUM AND KNOWLEDGE GRAPH

I think it is clear that Google is increasingly becoming an ad-hoc answer machine, and therefore the meaning of a search query is becoming more important than a purely keyword-based interpretation.

Conventional information retrieval methods are limited to the terms that appear in the search query and match them with content in which those terms appear, without considering how the terms relate to each other in context or determining their actual meaning.
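The limitation of purely term-based matching is easy to demonstrate. In this minimal bag-of-words scorer (a deliberately simple stand-in for classic retrieval, not any specific system), two documents with opposite meanings receive the same score because word order and the relationships between the terms are ignored:

```python
from collections import Counter

def bag_of_words_score(query, document):
    """Classic term matching: count how many query terms occur in the document,
    ignoring word order and the relationships between terms."""
    doc_terms = Counter(document.lower().split())
    query_terms = Counter(query.lower().split())
    return sum(min(count, doc_terms[term]) for term, count in query_terms.items())

q = "flights from berlin to london"
print(bag_of_words_score(q, "cheap flights from berlin to london"))  # 5
print(bag_of_words_score(q, "cheap flights from london to berlin"))  # 5
```

The scorer cannot tell a flight from Berlin to London from one in the opposite direction.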

Innovations such as Rankbrain, BERT and MUM focus on identifying the entities searched for by matching them against an entity database (the Knowledge Graph), deriving the context from the relationships between the relevant entities, and using this to determine the meaning of search queries and documents. For normalization and simplification, search queries are refined, rewritten and enriched with semantic annotations.
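Entity recognition, normalization and annotation can be illustrated with a toy dictionary lookup. The miniature entity index, the `E:` identifiers and the longest-match rule are hypothetical; real systems rely on machine-learned models and the full Knowledge Graph rather than a hand-written table.

```python
import re

# Hypothetical miniature "knowledge graph": surface forms -> entity IDs.
ENTITY_INDEX = {
    "big apple": "E:New_York_City",
    "new york": "E:New_York_City",
    "nyc": "E:New_York_City",
    "statue of liberty": "E:Statue_of_Liberty",
}

# One alternation with the longest surface forms first, so longer names win.
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(s) for s in sorted(ENTITY_INDEX, key=len, reverse=True)) + r")\b"
)

def annotate(query):
    """Normalize the query, rewrite known surface forms to canonical entity IDs
    in a single pass, and return the rewrite plus its (surface, entity) annotations."""
    text = re.sub(r"\s+", " ", query.lower()).strip()
    annotations = []

    def link(match):
        entity = ENTITY_INDEX[match.group(1)]
        annotations.append((match.group(1), entity))
        return entity

    return PATTERN.sub(link, text), annotations

print(annotate("Statue of Liberty  tickets Big Apple"))
```

Different surface forms such as "big apple", "new york" and "nyc" collapse to the same entity ID, which is the kind of normalization described above.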

This rethinking of information retrieval at Google led to the introduction of the Knowledge Graph in 2012, the Hummingbird update in 2013 and Rankbrain in 2015. These innovations are directly related to each other and intertwine in terms of process.

- Rankbrain is responsible for interpreting search queries in terms of synonymity, ambiguity, meaning (intent) and significance (extension). Rankbrain is the central element in query processing, especially for new search queries, long-tail keywords, ambiguous terms, and queries from voice search and digital assistants.

- The entities and facts recorded in the Knowledge Graph, such as attributes and relationships between entities, can be used both in determining the relevance of results and in query processing, for example, to enrich documents and search queries with facts from the Knowledge Graph via annotations. This makes it possible to better interpret the meaning of search queries as well as documents or individual paragraphs and sentences in content.

- The Hummingbird algorithm is responsible for clustering content by meaning and purpose into different corpora and for evaluating (scoring) the results in terms of relevance, i.e. ranking.

The unifying element in all three modules is entities; they are the lowest common denominator. Vector space analysis, word embeddings and natural language processing play a central role in Rankbrain, BERT and MUM. Advances in machine learning allow Google to apply these methods more and more efficiently and to evolve from a purely text-based search engine into a semantic search engine.
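The vector space idea behind word embeddings can be shown with cosine similarity over a few hand-made vectors. The three-dimensional vectors are hypothetical and chosen for illustration; learned embeddings have hundreds of dimensions and are trained rather than written by hand, but the similarity computation works the same way.

```python
import math

# Hypothetical 3-dimensional "embeddings" for illustration only.
EMBEDDINGS = {
    "car":        [0.90, 0.10, 0.00],
    "automobile": [0.85, 0.15, 0.05],
    "jaguar":     [0.60, 0.50, 0.10],
    "banana":     [0.00, 0.10, 0.95],
}

def cosine(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest(word, k=2):
    """Return the k words whose vectors point in the most similar direction."""
    others = [w for w in EMBEDDINGS if w != word]
    return sorted(others, key=lambda w: cosine(EMBEDDINGS[word], EMBEDDINGS[w]), reverse=True)[:k]

print(nearest("car"))  # ['automobile', 'jaguar']
```

"automobile" ends up closest to "car" although the two strings share no characters, which is the shift from strings to meaning in miniature.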

Things not strings!

- The dimensions of the Google ranking - 25. April 2024

- Interesting Google patents for search and SEO in 2024 - 3. April 2024

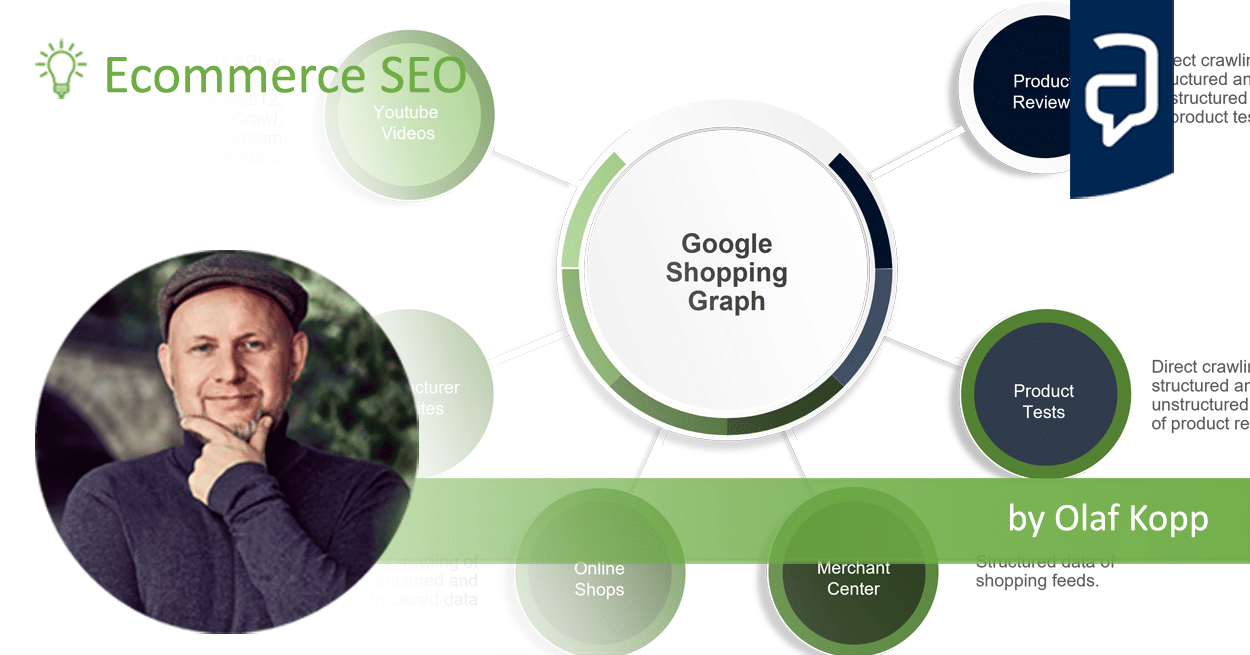

- What is the Google Shopping Graph and how does it work? - 27. February 2024

- “Google doesn’t like AI content!” Myth or truth? - 19. February 2024

- Most interesting Google Patents for semantic search - 12. February 2024

- How does Google search (ranking) may be working today - 4. February 2024

- Success factors for user centricity in companies - 28. January 2024

- Social media has become one of the most important gatekeepers for content - 28. January 2024

- E-E-A-T: Google ressources, patents and scientific papers - 24. January 2024

- Patents and research papers for deep learning & ranking by Marc Najork - 21. January 2024
