Insights from the Whitepaper “How Google Fights Disinformation” on E-A-T and Ranking

In 2019, Google presented a very interesting whitepaper titled “How Google Fights Disinformation” at the Munich Security Conference. This whitepaper is just as important as the Quality Rater Guidelines and contains many interesting statements. It includes many references to E-A-T ratings, the Quality Rater Guidelines, PageRank and entities, which I summarize and interpret for you in this article. This article is also available in German >>> https://www.sem-deutschland.de/blog/e-a-t-fehlinformationen/


What is the whitepaper “How Google Fights Disinformation” about?

In the whitepaper, Google presents its own measures for dealing with misinformation. This is Google’s response to the criticism in recent years that Google, Facebook and others are doing too little to combat the spread of fake news. With this whitepaper and the information explained in it, Google shows how important the topic is to them and how Google is facing up to its social responsibility.

However, like other communication channels, the open Internet is vulnerable to the organized propagation of false or misleading information. Over the past several years, concerns that we have entered a “post-truth” era have become a controversial subject of political and academic debate.

These concerns directly affect Google and our mission – to organize the world’s information and make it universally accessible and useful. When our services are used to propagate deceptive or misleading information, our mission is undermined. How companies like Google address these concerns has an impact on society and on the trust users place in our services. We take this responsibility very seriously and believe it begins with providing transparency into our policies, inviting feedback, enabling users, and collaborating with policymakers, civil society, and academics around the world.

The whitepaper distinguishes between the measures in Google Search, Google News and YouTube. It begins by distinguishing between misinformation, disinformation and fake news. Misinformation is false information spread unintentionally, whereas disinformation and fake news are false information spread knowingly. The whitepaper focuses on this intentionally disseminated false information.

Statements on the general evaluation

The first chapter already contains the first remarkable statement:

The entities that engage in disinformation have a diverse set of goals. Some are financially motivated, engaging in disinformation activities for the purpose of turning a profit… Levels of funding and sophistication vary across those entities, ranging from local mom-and-pop operations to well-funded and state-backed campaigns… Sometimes, a successful disinformation narrative is propagated by content creators who are acting in good faith and are unaware of the goals of its originators.

Here, Google uses the term “entities” to mean editors, publishers or authors.

We have an important responsibility to our users and to the societies in which we operate to curb the efforts of those who aim to propagate false information on our platforms.

Here, Google once again emphasizes its own responsibility to the users of its offerings and to society.

This complexity makes it difficult to gain a full picture of the efforts of actors who engage in disinformation or gauge how effective their efforts may be. Furthermore, because it can be difficult to determine whether a propagator of falsehoods online is acting in good faith, responses to disinformation run the risk of inadvertently harming legitimate expression.

Evaluating these entities and the information they publish appears to be complex. That is why Google cannot rule out that legitimate statements may also come under scrutiny.

Easy access to context and a diverse set of perspectives are key to providing users with the information they need to form their own views. Our products and services expose users to numerous links or videos in response to their searches, which maximizes the chances that users are exposed to diverse perspectives or viewpoints before deciding what to explore in depth.

Here, Google emphasizes that it by no means promotes only one opinion, but wants to show users as many perspectives on a topic as possible to help them form their own opinions.

Our work to address disinformation is not limited to the scope of our products and services. Indeed, other organizations play a fundamental role in addressing this societal challenge, such as newsrooms, fact-checkers, civil society organizations, or researchers.

Here it is pointed out that Google not only relies on its own resources and algorithms, but also works with external organizations such as newsrooms, fact-checkers, civil society organizations and researchers to verify the accuracy of information.

Partnering with Poynter’s International Fact-Checking Network (IFCN), a nonpartisan organization gathering fact-checking organizations from the United States, Germany, Brazil, Argentina, South Africa, India, and more.

Here Google becomes a bit more concrete and names Poynter’s International Fact-Checking Network as a partner, which brings together organizations from the United States, Germany, Brazil, Argentina, South Africa, India and other countries. A look at the Google Fact Check Explorer gives a first impression of which organizations play a role. Correctiv and Presseportal, among others, are very active here. Here is a screenshot from the tool on the topic of “Corona”:

Making it easier to discover the work of fact-checkers on Google Search or Google News, by using labels or snippets making it clear to users that a specific piece of content is a fact-checking article.

This can be seen in the Google Fact Check Explorer or in the screenshot above: each claim is shown together with the fact-checker’s rating, for example true or false, and the reasoning behind it.

“Knowledge” or “Information” Panels in Google Search and YouTube, providing high-level facts about a person or issue.

In these statements, Google emphasizes the validity of the facts in Knowledge Panels, Knowledge Cards and Featured Snippets. However, examples showing that there is still room for improvement come up time and again.

We try to make sure that our users continue to have access to a diversity of websites and perspectives. Google Search and Google News take different approaches toward that goal.

Here Google emphasizes once again that diversity in terms of websites and perspectives on a topic is important to them in Google Search and Google News.

Google Search: contrary to popular belief, there is very little personalization in Search based on users’ inferred interests or Search history before their current session. It doesn’t take place often and generally doesn’t significantly change Search results from one person to another. Most differences that users see between their Search results and those of another user typing the same Search query are better explained by other factors such as a user’s location, the language used in the search, the distribution of Search index updates throughout our data centers, and more. Furthermore, the Top Stories carousel that often shows in Search results in response to news-seeking searches is never personalized.

Here Google emphasizes that search results are only minimally personalized based on interests and search history. Most differences between two users’ results are explained by other factors such as the searcher’s location, the language of the search and the gradual distribution of index updates across Google’s data centers. The results displayed in the Top Stories carousel are never personalized.

We are aware that many issues remain unsolved at this point. For example, a known strategy of propagators of disinformation is to publish a lot of content targeted on “data voids”, a term popularized by the U.S. based think tank Data and Society to describe Search queries where little high-quality content exists on the web for Google to display due to the fact that few trustworthy organizations cover them. This often applies, for instance, to niche conspiracy theories, which most serious newsrooms or academic organizations won’t make the effort to debunk. As a result, when users enter Search terms that specifically refer to these theories, ranking algorithms can only elevate links to the content that is actually available on the open web – potentially including disinformation.

Here Google addresses the problem of “data voids”: many niche conspiracy theories are not covered by trustworthy sources. For queries about these theories, there is therefore no reliable content that could rank above the dubious sources.

Our approach to tackling disinformation in our products and services is based around a framework of three strategies: make quality count in our ranking systems, counteract malicious actors, and give users more context.

Google describes three main strategies to detect and exclude disinformation:

- Making content quality count in ranking

- Penalizing malicious sources

- Providing context for the user

Statements about ranking in general

Regarding point 1, Google writes the following:

These algorithms are geared toward ensuring the usefulness of our services, as measured by user testing, not fostering the ideological viewpoints of the individuals that build or audit them.

Here Google emphasizes that the ranking algorithms are meant to ensure usefulness for the user. Usefulness is measured through user testing, not by the ideological views of the people who build or audit the algorithms. This may indicate that user signals are evaluated algorithmically.

Over the course of the past two decades, we have invested in systems that can reduce ‘spammy’ behaviors at scale, and we complement those with human reviews.

Here Google highlights that over the past 20 years it has invested heavily in systems that fight spam at scale, and that it complements these systems with human reviews. This could mean quality raters, employees of Google’s web spam team or external experts.

However, there are clear cases of intent to manipulate or deceive users. For instance, a news website that alleges it contains “Reporting from Bordeaux, France” but whose account activity indicates that it is operated out of New Jersey in the U.S. is likely not being transparent with users about its operations or what they can trust it to know firsthand.

Here Google gives an example of how the credibility of content can be checked: a source that claims to provide first-hand information but in reality only reports second- or third-hand. The issue here is transparency.

Here it is pointed out that Google takes into account the context and expectation of the user when ranking.

Google Search aims to make information from the web available to all our users. That’s why we do not remove content from results in Google Search, except in very limited circumstances. These include legal removals, violations of our webmaster guidelines, or a request from the webmaster responsible for the page.

Here, Google states that it does not remove content from its search results unless the removal is legally required, the content violates the Webmaster Guidelines, or the webmaster responsible for the page requests it.

They use algorithms, not humans, to determine the ranking of the content they show to users. No individual at Google ever makes determinations about the position of an individual webpage link on a Google Search or Google News results page… Our algorithms are geared toward ensuring the usefulness of our services, as measured by user testing, not fostering the ideological viewpoints of the individuals who build or audit them.

Here Google confirms once again that no individual decides where a page ranks in Google Search or Google News.

Because Google News does not attempt to be a comprehensive reflection of the web, but instead to focus on journalistic accounts of current events, it has more restrictive content policies than Google Search. Google News explicitly prohibits content that incites, promotes, or glorifies violence, harassment, or dangerous activities. Similarly, Google News does not allow sites or accounts that impersonate any person or organization, that misrepresent or conceal their ownership or primary purpose, or that engage in coordinated activity to mislead users.

With regard to Google News, Google writes that stricter rules apply than in Google Search, since Google News does not aim to reflect the entire web but focuses on journalistic coverage of current events.

For instance, ranking in Google News is built on the basis of Google Search ranking and they share the same defenses against “spam” (attempts at gaming our ranking systems)

An exciting statement. The ranking of content in Google News seems to be built on the same basis as classic Google Search, at least as far as spam-fighting is concerned.

Our algorithms can detect the majority of spam and demote or remove it automatically. The remaining spam is tackled manually by our spam removal team, which reviews pages (often based on user feedback) and flags them if they violate the Webmaster Guidelines. In 2017, we took action on 90,000 user reports of search spam and algorithmically detected many more times that number.

Here Google states very confidently that most spam is detected automatically and then demoted or removed. Spam the algorithms miss is handled manually by Google’s spam removal team, which reviews pages, often based on user feedback, and flags them if they violate the Webmaster Guidelines. In 2017, Google acted on about 90,000 user reports of search spam and detected many times that number algorithmically.

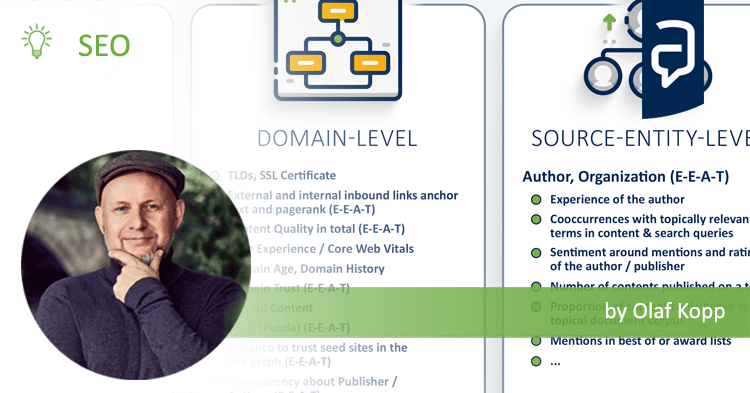

Statements on E-A-T and the Quality Rater Guidelines

The systems do not make subjective determinations about the truthfulness of webpages, but rather focus on measurable signals that correlate with how users and other websites value the expertise, trustworthiness, or authoritativeness of a webpage on the topics it covers.

Authority and trustworthiness come up repeatedly throughout the document, and at this point E-A-T is referred to directly. The systems rely on measurable signals that correlate with how users and other websites value the expertise, authoritativeness and trustworthiness of a page on a particular topic. In a nutshell: E-A-T always relates to a topic, and in this case it refers not to a single document but to the entire source.

To perform these evaluations, we work with Search Quality Evaluators who help us measure the quality of Search results on an ongoing basis. Evaluators assess whether a website provides users who click on it with the content they were looking for, and they evaluate the quality of results based on the expertise, authoritativeness, and trustworthiness of the content… The resulting ratings do not affect the ranking of any individual website, but they do help us benchmark the quality of our results, which in turn allows us to build algorithms that globally recognize results that meet high-quality criteria.

Here, Google addresses the E-A-T rating by search quality evaluators described in the Quality Rater Guidelines. The evaluators rate search results as a whole to identify general patterns of good, trustworthy content and of bad content, which can then be incorporated into the algorithmic scoring of individual pieces of content.

We continue to improve on Search every day. In 2017 alone, Google conducted more than 200,000 experiments that resulted in about 2,400 changes to Search. Each of those changes is tested to make sure it aligns with our publicly available Search Quality Rater Guidelines, which define the goals of our ranking systems and guide the external evaluators who provide ongoing assessments of our algorithms.

Here Google gets a bit more specific and gives concrete figures on algorithm development. Particularly interesting is the statement that there is a direct connection between Google’s ranking algorithms and the Quality Rater Guidelines. Until now, Google had not made such a clear statement.

“Ranking algorithms are an important tool in our fight against disinformation. Ranking elevates the relevant information that our algorithms determine is the most authoritative and trustworthy above information that may be less reliable. These assessments may vary for each webpage on a website and are directly related to our users’ searches. For instance, a national news outlet’s articles might be deemed authoritative in response to searches relating to current events, but less reliable for searches related to gardening.”

Google again indirectly mentions E-A-T at this point and emphasizes that the ranking algorithms are designed to elevate the most reliable and relevant content. Here, the E-A-T assessment refers to the specific content and the search query it answers.

We use ranking algorithms to elevate authoritative, high-quality information in our products.

Authority again. The A in E-A-T.

Our ranking system does not identify the intent or factual accuracy of any given piece of content. However, it is specifically designed to identify sites with high indicia of expertise, authority, and trustworthiness.

Here again is a reference to E-A-T. Google also writes that the ranking system does not assess the intent or factual accuracy of individual pieces of content but identifies sites, so the E-A-T rating refers to the source or website itself.

For most searches that could potentially surface misleading information, there is high-quality information that our ranking algorithms can detect and elevate… For these “YMYL” pages, we assume that users expect us to operate with our strictest standards of trustworthiness and safety. As such, where our algorithms detect that a user’s query relates to a “YMYL” topic, we will give more weight in our ranking systems to factors like our understanding of the authoritativeness, expertise, or trustworthiness of the pages we present in response.

Here, Google applies its strictest E-A-T standards to those search queries where misleading information could appear, more specifically to queries on so-called “Your Money or Your Life” (YMYL) topics.

To reduce the visibility of this type of content, we have designed our systems to prefer authority over factors like recency or exact word matches while a crisis is developing. In addition, we are particularly attentive to the integrity of our systems in the run-up to significant societal moments in the countries where we operate, such as elections.

This currently has a very strong effect on the SERPs because of the coronavirus crisis. During crises, Google weights E-A-T signals so heavily for relevant search queries that they outweigh traditional document-level ranking factors such as exact keyword matches or recency.
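To illustrate what “giving more weight” to authority could mean mechanically, here is a toy re-ranking sketch. Everything in it is hypothetical: the three signals, their 0 to 1 scores, the two weight profiles and the `sensitive_query` flag are assumptions made for illustration, not values or functions Google has published. It only shows how shifting weight from recency and exact matches toward authority can reorder results once a query is classified as YMYL or crisis-related.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    authority: float    # hypothetical 0..1 estimate of E-A-T-style signals
    recency: float      # hypothetical 0..1 freshness score
    exact_match: float  # hypothetical 0..1 share of query terms matched verbatim


# Hypothetical weight profiles; Google has not published any such numbers.
DEFAULT_WEIGHTS = {"authority": 0.3, "recency": 0.35, "exact_match": 0.35}
CRISIS_WEIGHTS = {"authority": 0.7, "recency": 0.15, "exact_match": 0.15}


def score(c: Candidate, weights: dict[str, float]) -> float:
    """Linear combination of the three toy signals."""
    return (weights["authority"] * c.authority
            + weights["recency"] * c.recency
            + weights["exact_match"] * c.exact_match)


def rank(candidates: list[Candidate], sensitive_query: bool) -> list[str]:
    """Order URLs by score, using the authority-heavy profile for YMYL/crisis queries."""
    weights = CRISIS_WEIGHTS if sensitive_query else DEFAULT_WEIGHTS
    ordered = sorted(candidates, key=lambda c: score(c, weights), reverse=True)
    return [c.url for c in ordered]


# Hypothetical example: a fresh, keyword-stuffed page vs. an authoritative source.
candidates = [
    Candidate("health-authority.example", authority=0.9, recency=0.4, exact_match=0.5),
    Candidate("viral-blog.example", authority=0.2, recency=1.0, exact_match=1.0),
]

print(rank(candidates, sensitive_query=False))  # ['viral-blog.example', 'health-authority.example']
print(rank(candidates, sensitive_query=True))   # ['health-authority.example', 'viral-blog.example']
```

With the default profile the fresh, exact-match page wins (0.76 vs. 0.585); with the authority-heavy profile the order flips (0.765 vs. 0.44). A real ranking system combines far more signals, so this is only a picture of the weighting principle the whitepaper describes.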

Google’s algorithms identify signals about pages that correlate with trustworthiness and authoritativeness. The best known of these signals is PageRank, which uses links on the web to understand authoritativeness.

Here Google mentions one of the signals that can be used for an evaluation according to E-A-T: the good old PageRank, in other words links.
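How links can be turned into an authority score is easiest to see in the textbook PageRank formulation: power iteration with a damping factor of 0.85, where pages without outlinks spread their rank evenly. This is a minimal sketch of that classic idea, not Google’s current production signal, and the link graph and domain names below are hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 100) -> dict[str, float]:
    """Textbook PageRank via power iteration over a small link graph.

    `links` maps each page to the pages it links to. Pages without outlinks
    (dangling nodes) distribute their rank evenly across all pages.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}

    for _ in range(max_iter):
        # Rank held by dangling pages is shared equally by everyone.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        # Every other page passes its rank in equal parts along its outlinks.
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:
            break
    return rank


# Hypothetical link graph: several sites link to the health authority,
# while nobody links to the new blog.
web = {
    "health-authority.example": ["university.example"],
    "news-outlet.example": ["health-authority.example"],
    "university.example": ["health-authority.example", "news-outlet.example"],
    "new-blog.example": ["health-authority.example"],
}

scores = pagerank(web)
for page in sorted(scores, key=scores.get, reverse=True):
    print(f"{page}: {scores[page]:.4f}")
```

The most-linked page, health-authority.example, ends up with the highest score and the unlinked new-blog.example with the lowest; the scores always sum to 1. Links from well-linked pages count for more, which is why PageRank is read as a signal of authoritativeness rather than of popularity alone.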

We take additional steps to improve the quality of our results for contexts and topics that our users expect us to handle with particular care.

Conclusion: E-A-T, Quality Rater Guidelines and older concepts like PageRank play a central role

Google is aware of the responsibility that comes with its services Search, News and YouTube. The credibility and accuracy of content are the top priority, along with relevance and usefulness for the user. Especially during the current coronavirus crisis, Google seems to rely very strongly on the principles and measures from this whitepaper. The close timing between the publication of the whitepaper and the numerous Google (core) updates in 2019 suggests a connection.

In summary, the following key messages can be derived from the whitepaper:

- Google wants to maintain a balance between a diversity of content and sources and a focus on trustworthy sources.

- The criteria of expertise, authority and trustworthiness play a central role in the evaluation of content and its sources. Apart from links (PageRank), it remains unclear which signals are used for the evaluation. More on this in my Search Engine Land article “14 ways Google may evaluate E-A-T”.

- The Quality Rater Guidelines form a model on which Google bases its ranking and evaluation algorithms.

- Individual search results are not evaluated by humans in terms of ranking. Search evaluators only assess SERPs in their entirety and examples of good and bad content to identify patterns of typical quality criteria, which can then play a role in the ranking algorithm.

- Google News ranking is built on the same basis as Google Search ranking and shares its spam defenses. In Google News, the E-A-T evaluation criteria are weighted more heavily.

- For searches on YMYL topics, the E-A-T evaluation criteria are weighted more heavily.

- For crises and special events such as elections, the evaluation criteria according to E-A-T are the most important ranking factor.

- Fact-checkers such as the organizations in Poynter’s International Fact-Checking Network play a key role in providing users with the necessary context for a piece of content. These ratings could also be used to classify sources, authors and publishers by credibility.

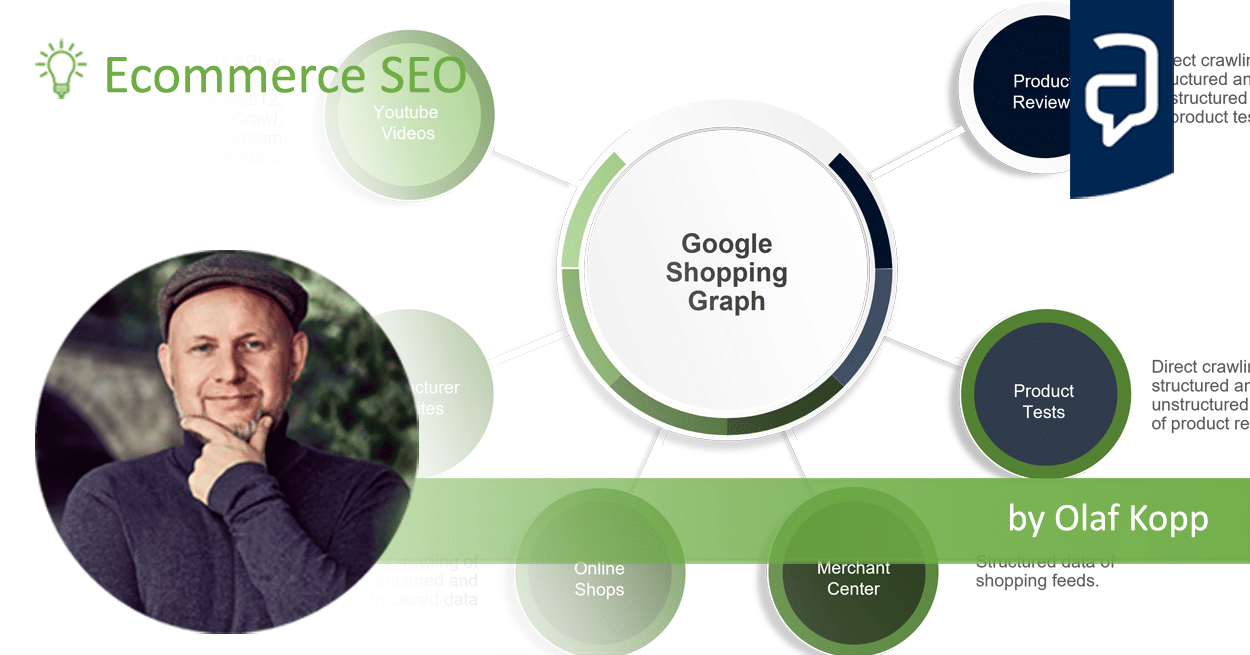

- The dimensions of the Google ranking - 25. April 2024

- Interesting Google patents for search and SEO in 2024 - 3. April 2024

- What is the Google Shopping Graph and how does it work? - 27. February 2024

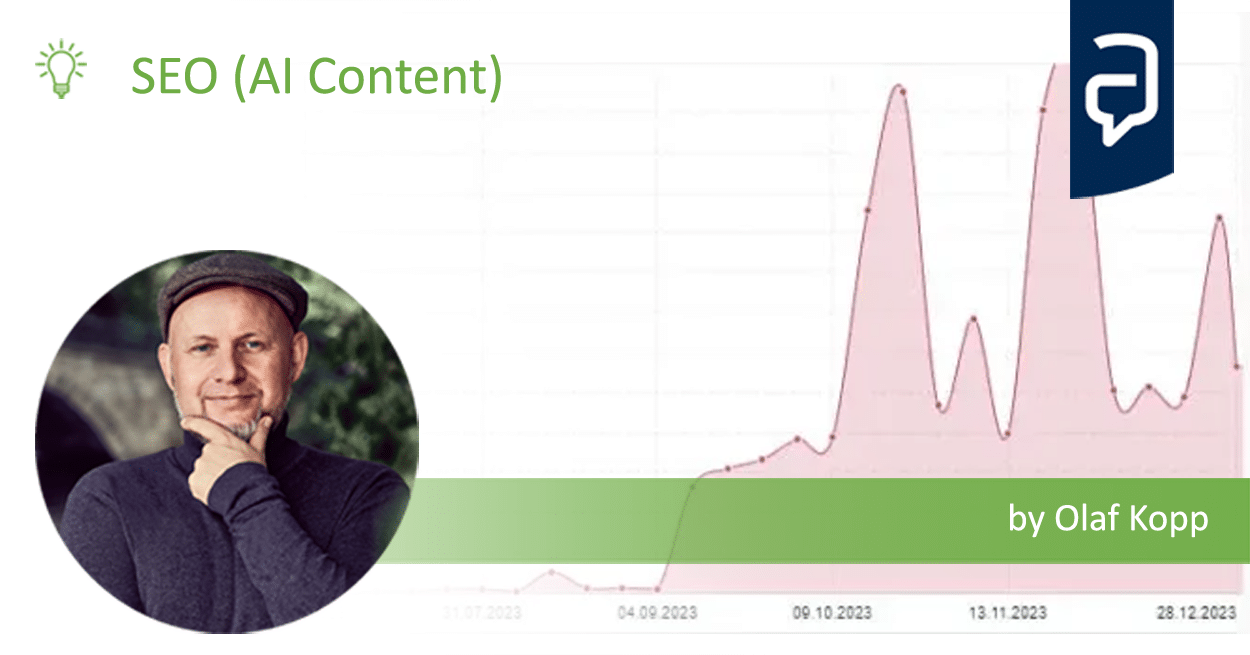

- “Google doesn’t like AI content!” Myth or truth? - 19. February 2024

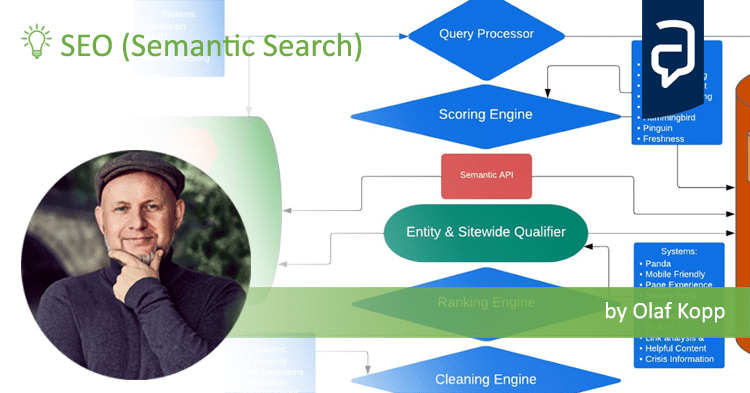

- Most interesting Google Patents for semantic search - 12. February 2024

- How does Google search (ranking) may be working today - 4. February 2024

- Success factors for user centricity in companies - 28. January 2024

- Social media has become one of the most important gatekeepers for content - 28. January 2024

- E-E-A-T: Google ressources, patents and scientific papers - 24. January 2024

- Patents and research papers for deep learning & ranking by Marc Najork - 21. January 2024