How Google can identify and interpret entities from unstructured content?

Knowledge databases like the Knowledge Graph challenge Google with the task of balancing completeness and accuracy of information. A necessary condition for completeness is that Google is able to identify, interpret and extract information in unstructured data sources. More on this in this article. Here is the german article >>> https://www.sem-deutschland.de/blog/informationen-entitaeten-websites/

Contents

- 1 Google’s journey to semantic understanding

- 2 The problem with knowledge databases like Wikipedia and Wikidata

- 3 Closed vs. open extraction of information

- 4 Tail entity detection

- 5 Named Entity Recognition

- 6 Extraction of events

- 7 Machine Learning as a central technology for processing unstructured data

- 8 Assignment of new entities to classes and types via Unsupervised Machine Learning

- 9 Methods for ensuring topicality

- 10 The Knowledge Vault as Knowledge Graph 2.0

- 11 Conclusion: Google is only at the beginning in the extraction of unstructured information

Google’s journey to semantic understanding

The concern of extracting semantic information about objects or entities from unstructured documents has occupied Google since the late 1990s. One can find a Google patent from 1999 with the title Extracting Patterns and Relations from Scattered Databases Such as the World Wide Web (pdf). It is one of the first Google patents on semantic issues ever.

The first step of the Knowledge Graph was the extraction of structured and semi-structured data. Here Google is already quite good at extracting and processing information from e.g. Wikipedia or Wikidata former freebase. More about this in the articles How does Google process information from Wikipedia for the Knowledge Graph?.

But this was only the beginning, as the limitations of such a methodology are obvious.

The problem with knowledge databases like Wikipedia and Wikidata

Since Wikidata and Wikipedia have captured only a fraction of all entities in the real world, the most difficult task for Google is to extract information about entities and entity types from other websites besides the ones mentioned above. Most websites and documents are all built differently and usually do not have a uniform structure. Therefore, Google still has a big task ahead of it to further expand the Knowledge Graph.

Structured and semi-structured information from manually maintained data sources such as Wikipedia or Wikidata are often checked and prepared in such a way that Google can easily extract them and add them to the Knowledge Graph. But these websites and databases are not perfect either.

The problem with manually maintained databases and semistructured websites like Wikipedia is the lack of completeness, validity and topicality of the data.

- Completeness means on the one hand related to the entities recorded in a database per se, and on the other hand related to their attributes and assigned entity types.

- Validity refers to the correctness of the recorded attributes, statements or facts.

- Topicality refers to the attributes of the saved entities.

It is validity and completeness that are in tension with each other. If Google relies only on Wikipedia, the validity of the information is very high due to the rigorous scrutiny of the eager Wikipedians. When it comes to timeliness, it becomes more difficult, and when it comes to completeness, the information is simply not enough, since Wikipedia only represents a fraction of the world’s knowledge.

To achieve the goal of approximating completeness, Google must be able to extract unstructured data from websites while also paying attention to validity and timeliness. For example, articles in Google News represent a very interesting source of information to ensure the timeliness of entities already captured in the Knowledge Graph.

Google has a huge pool of knowledge at its disposal through the trillions of indexed content or documents. These can be news sites, blogs, magazines, reviews, stores, glossaries, dictionaries … …

But not every source of information is valid enough to be useful as a source of information. Therefore, the first step is to identify the right domain as a source.

By identifying the mentions of entities already stored in the Knowledge Graph, entity-relevant documents can be identified in the first step.

The terms that occur in the immediate vicinity of the named entities as co-occurrences can be linked to them. From this, attributes as well as other entities to the main entity can be extracted from the content and stored in the respective entity profile. The proximity between the terms and the entity in the text as well as the frequency of the occurring main-entity-attribute-pairs or main-entity-next-entity-pairs can be used as validation as well as weighting.

Thus, Google can constantly enrich the entities in the Knowledge Graph with new information.

Below I have researched Google patents and other sources to find approaches to meet completeness (recall), validity, and timeliness.

Closed vs. open extraction of information

Before I get into the specific approaches I would like to briefly discuss the two broad types of extraction. Open and Closed Extraction. In closed extraction, the premise is that the entities with a URI are already captured and they are completed or updated in terms of new attributes and relationships to other entities. Open extraction additionally involves the identification and capture of previously unknown or unrecorded entities and their attributes. This concerns the completeness of the entire knowledge databases not only the completeness of attributes and relationships per entity.

Example process for a closed extraction of facts/information

In the Google patent Learning objects and facts from documents a process is explained how Google can capture new information about already captured entities/objects without human intervention.

In the Google patent Learning objects and facts from documents a process is explained how Google can capture new information about already captured entities/objects without human intervention.

Abstract

Tail entity detection

Basically there are three ways how Google can detect and capture tail entities. The previous way via structured data e.g. from Wikidata or the extraction from online content of various blogs, websites, social networks … or manually via Json-ld or microdata excellent structured data.

I think it was Google’s plan back then to extend the Knowledge Graph regarding tail entities via Google+ and the Authorship mark-ups. We know how that ended at the latest with the shutdown of Google+ in April 2019.

However, I think these ways are only an interim solution, as the creation and verification still has to be done manually. Thus, it is not a scalable solution that Google will prefer. Google has been trying to push structured data markup by webmasters and SEOs in recent years. To me, there is only one reason for this: they want to provide their machine learning algorithms with as much human-validated structured data as possible as training data. The goal is to eventually no longer need structured data.

One way for Google for the future must be to automatically identify new entities from freely available documents, interpret them and create them in the Knowledge Graph. The following steps could be taken for this purpose:

- Identification of potential entities (Named Entity Recognition)

- Extraction of classes and types: Assignment of the entity into one or more semantic classes and types based on the context in which the entity is repeatedly named. This would allow initial attribute sets to be stored, which are gradually completed with values.

Tip for nerds!

Here is a tutorial for a simple implementation via Python to build yourself:

Training spaCy’s Statistical Models

Example for numerical patterns to build yourself in Python:

- Extraction of relationships: Establishing relationships to other already recorded entities with similar or the same patterns or in similar contexts Finding mention.

Tip for nerds!

For Python savvy nerds here is a tutorial, the implementation based on Google’s embedding technology:

In some Google patents one finds clues how Google could solve this problem in the future. The patent Computerized Systems and Methods for Extracting and Storing Information regarding Entities gives the following approach to extract new entities including attributes and associated entity classes from documents.

The first step is to check whether a document contains one or more already known or new entities. If the entities are already known, it is checked whether the content contains attributes that were not previously associated with the entity. If these are found to be suitable, the already stored entity is updated with the new attributes.

If potential new entities are discovered, they are placed in the context of the content. In order to classify the previously unknown entity into one or more entity classes, already known entities are searched for in the content that have similar attributes or appear to be adjacent. Using the context and the entity classes or their attributes, a clearer picture of the semantic context of the new entity emerges.

A hierarchical class membership can be determined via a relationship scoring.

This can then be stored in the graph including initial attributes, relationship to other entities and entity classes or types.

The patent was updated in February 2019 and has a term until 2037, which suggests that Google is working with it.

Another Google patent from 2019 titled “Automatic Discovery of new Entities using Graph Reconciliation” describes another method to extract and verify new entities including attributes and relationship types from unstructured online sources.

The method describes how Google could extract single entity graphs from website content discovered tuples of subject entities, object entities, and predicate phrases. These individual graphs are grouped by entity types so that they can be better compared with.

Facts can be verified via matching the different entity graphs. Tuples or facts that occur frequently in the individual graphs are more credible than facts that occur only sporadically. As soon as the number of identical mentions exceeds a threshold value, they are verified as correct. All verified facts for the respective entity are merged into a new entity graph and stored in the knowledge graph.

Named Entity Recognition

The recognition of named entities in sentences, paragraphs and whole texts is the very first process step in the generation of information for a Knowledge Graph. The most common method for this is supervised and semisupervised machine learning. Via manually assisted machine learning, a passable value can be achieved with respect to the recall or degree of completeness of entities in a Knowledge Graph measured against the totality of all entities. The better training data for entities of a certain ontology like e.g. persons, organizations, events … are annotated or tagged the better the result. This distinction is the manual support of the algorithm. Scientific experiments have shown that a seed set of only 10 manually entered facts can lead to a precision or correctness of 88% for a corpus of several million documents.

For named entity recognition, typical features such as part of speech (noun, object, subject) or co-occurrences with specific information can help.

Tip for nerds!

Here are some instructions for easy implementation via Python:

Thus, an entity from the class Actor is often referred to in connection with phrases such as has won the Golden Globe, is a Movie Star, is nominated for the Golden Camera, has acted in movie xy … mentioned. Word properties such as the character type as well as number or word ending can also be at least indications of an entity class, entity type, or attribute value. For example, languages usually end in “ish” or years consist of 4 numbers.

Other features can be via matching with nouns found in dictionaries or co-occurrence with certain terms. Thus, a direct co-occurrence with the term GmbH indicates that it could be a company. The term combination “FC + city” leads to the assumption that it is a soccer club.

However, a characteristic by itself is often not an indication or even proof. Only the combination of different characteristics gives certainty.

More about Entity Recognition via Natural Language Processing in my article NATURAL LANGUAGE PROCESSING TO BUILD A SEMANTIC DATABASE.

Extraction of events

The extraction of events is about identifying current events and their meaning. Especially for Google News and its display in the normal SERPs as well as for the detection of seasonal events this plays a major role.

Google can recognize current events on the one hand via a sudden increase in the number of search queries for an entity and/or a combination of entities and event trigger terms. Or Google can detect an event via co-occurrences of these features in recent reports in news magazines, blogs …

Events and entities are closely related. Entities are often actors or participants in events or happenings and events without entities are uncommon. Interpretation of events and entities is highly context dependent. That is why modern methods for interpreting events are always based on the immediately mentioned entities and event typical trigger terms. Such trigger terms can be e.g. “fire”, “bombs”, “dead”, “attack”, “victory”, “won”, earthquake”, “catastrophe” …

Here I found an example of an event related to the entity Snoop Dogg on Google:

Machine Learning as a central technology for processing unstructured data

A central technology for the extraction of information and Natural Language Processing is Machine Learning in its various manifestations.

The main tasks involved in processing unstructured data sources include.

- Extraction of information from websites

- Named entity recognition (NCE)

- Extraction of relations (Relation Extraction)

- Extraction of events (Event Extraction)

- Recognition and extraction of types & classes and mapping of entities

To refine the mapping and training, methods such as lemmatization and composite decomposition are applied.

Assignment of new entities to classes and types via Unsupervised Machine Learning

One method to contextualize previously unknown entities or to assign entity classes or entity types is clustering. This can be done using Unsupervised Machine Learning algorithms.

In this process, terms recognized as potential entities in a text are assigned to types or classes with respect to the indirect or immediate term environment in which they are mentioned. This is done by matching them with co-occurrence patterns that the algorithm has identified from the analysis for certain classes. Vector space analyses can also be used for this purpose, in which the angle of the vectors is determined depending on the proximity of the terms to the searched entity.

This method can also be used to identify previously unrecognized entities. For example, from a feed of news such as Google News, one could thematically contextualize the co-occurrences of already captured named entities with previously unknown potential entities and automatically assign types and classes.

The complex thing here is to find a suitable threshold, from which an entity is so similar to another or so close to it (e.g. within a vector space -> word embeddings) that it is considered by Google to belong to it. That a generalistic threshold is used is also rather unlikely, possibly they are even as granular as entities.

If, for example, a previously unknown new car brand is repeatedly mentioned in messages together with known entities such as VW, Mercedes and Toyota, it is obvious that this is to be assigned to the entity class or type car manufacturer and that this new entity can therefore be associated with the attributes common to this class. This may also change the patterns for validating new entities and the thresholds.

Methods for ensuring topicality

To certain entities and concepts topicality is not a big problem, because the facts or attributes do not change for decades or centuries like for example buildings, events from the past, historical personalities … But there are entities that are in constant change like currently living persons, political offices …

Here, so-called Knowledge Base Acceleration systems (KBA systems) can enable a timely update of the data records.

From a continuous content stream of newly published reference-worthy content such as news articles, blog posts or tweets, entity-relevant content is imported in real time into a KBA system for analysis. These documents are either fed directly to the editors of the database or the facts are extracted first and then fed to the editors.

While the identification of relevant documents is a simple information retrieval process by entity name including synonyms, the automated process of extraction is somewhat more complex.

Here the methodology of Closed Information Extraction can be used. This methodology is limited to the detection of changes in the facts of already recorded data records.

With Closed Information Extraction, the extension or update can be started hierarchically from top to bottom, i.e. starting with the semantic class or entity type, or vice versa from bottom to top, starting with the entity.

The Google patent Extracting information from unstructured text using generalized extraction patterns describes a method how to continuously extend a database with new information from unstructured text based on seed facts extracted from single sentences. The patent is not described in terms of entities but could also be used to complete the attributes and relationships of entities.

Abstract

The Knowledge Vault as Knowledge Graph 2.0

The Knowledge Vault is a knowledge base that combines extractions from web content (by analyzing text, table data, structure and human annotations e.g. via Json-LD, Microdata) and information from existing knowledge bases like Wikidata, YAGO, DBpedia … combined with each other.

In many posts there has been speculation about whether Google’s Knowledge Vault will eventually replace the Knowledge Graph. I see the Knowledge Vault as Knowledge Graph 2.0 and perhaps the vault was only a vision of google replaced by another innovation. But the principle behind the knowledge vault is the future of the knowledge graph.

For the extraction of information, the Knowledge Vault uses structured data sources like Wikidata (formerly Freebase), semi-structured data sources like Wikipedia, as well as unstructured web content. A fusion module merges this data and processes it via supervised machine learning before transferring it to the Knowledge Graph / Knowledge Vault.

While classical Knowledge Graphs primarily retrieve information only from structured or at least semi-structured data sources, the Knowledge Vault is able to process information in a scalable way also from information maintained independently by hand. Here is an excerpt from the scientific paper Knowledge Vault: A Web-Scale Approach to Probabilistic Knowledge Fusion.

Here we introduce Knowledge Vault, a Web-scale probabilistic knowledge base that combines extractions from Web content (obtained via analysis of text, tabular data, page structure, and human annotations) with prior knowledge derived from existing knowledge repositories. We employ supervised machine learning methods for fusing these distinct information sources. The Knowledge Vault is substantially bigger than any previously published structured knowledge repository, and features a probabilistic inference system that computes calibrated probabilities of fact correctness.

The Knowledge Vault is being developed as a result of the stagnant growth of human-maintained data sources, such as Wikipedia, and the need to tap new sources of information to achieve the goal of a near-complete knowledge database without sacrificing information validity.

Therefore, we believe a new approach is necessary to further scale up knowledge base construction. Such an approach should automatically extract facts from the whole Web, to augment the knowledge we collect from human input and structured data sources. Unfortunately, standard methods for this task (cf. [44]) often produce very noisy, unreliable facts. To alleviate the amount of noise in the automatically extracted data, the new approach should automatically leverage already-cataloged knowledge to build prior models of fact correctness. Quelle: https://www.cs.ubc.ca/~murphyk/Papers/kv-kdd14.pdf

Thus, the Knowledge Vault follows the principle of open extraction.

Tip for nerds!

Here you can find a tutorial for fully automated extraction without the need for manual fine-tuning.

The path ranking algorithm can be used to identify typical relationship patterns between entities in structured data sources such as Wikidata. For example, entities related by the predicate “married to” are often related by the relationship value “parents of” to other entities and vice versa. These frequently occurring relationships can then be captured as rules.

Tip for nerds!

Here you can find a tutorial for the implementation on word and token level.

For the evaluation of information, the Knowledge Vault can determine whether a piece of information is correct or incorrect via correlations of the information and the evaluation of the source. In addition, the Knowledge Vault is significantly larger than traditional knowledge bases due to automated open extraction.

To create a Knowledge Graph of this size, facts are extracted from a variety of sources such as web data, including free text, HTML DOM trees, HTML web tables, and human annotations to web pages. The human annotations are via JSon-LD, schema.org…. AWARENED CONTENT.

The Knowledge Vault consists of three core components:

- Extractors: this component extracts the RDF triples or statements of object, predicate and subject already mentioned above from freely available documents, provides them with a confidence score to measure the credibility of the triple.

- Graph based priors: Based on the frequency of existing RDF triples in the database, this component checks the probability that this statement is relevant.

- Knowledge Fusion: Based on the information from the previous steps, this component checks whether the statement is correct and will be stored in the Knowledge Graph or not.

Conclusion: Google is only at the beginning in the extraction of unstructured information

The approaches and methods explained in this article for extracting unstructured information for the Knowledge Graph are already applicable and the Knowledge Vault plays the central role here. To what extent these are already being used remains speculation.

However, practice shows that Google has so far only made very limited use of unstructured information, at least as far as playout in the Knowledge Panels is concerned. The first practical applications can be found in the Featured Snippets, although this looks more like the direct use of Natural Language Processing than the use of the Knowledge Graph.

Even for entities not previously included in the Knowledge Graph, Google is currently only working with NLP to identify them, independent of the Knowledge Graph. For the identification of entities and thematic classification, Natural Language Processing performs well. However, this would only guarantee the criterion of completeness or topicality. NLP alone does not guarantee the claim of correctness.

I think that Google is already quite good in the area of Natural Language Processing, but does not yet achieve satisfactory results in the evaluation of automatically extracted information regarding correctness. This will probably be the reason why Google is still acting cautiously here, as far as direct positioning in the SERPs is concerned.

That’s why I would like to deal more in detail with the topic of NLP or Natural Language Processing in search in the next post.

Credits: The links to the Nerd Tips tutorials are provided by Philip Ehring. Philip is a trained and studied librarian and works in the focus of semantics, information retrieval and machine learning as a data engineer / data scientist in the text mining team of Otto Business Intelligence. The practical evaluation of Google and the individual components of the search engine with a view to search engine optimization has been an integral part of his career since his internship at Aufgesang.#

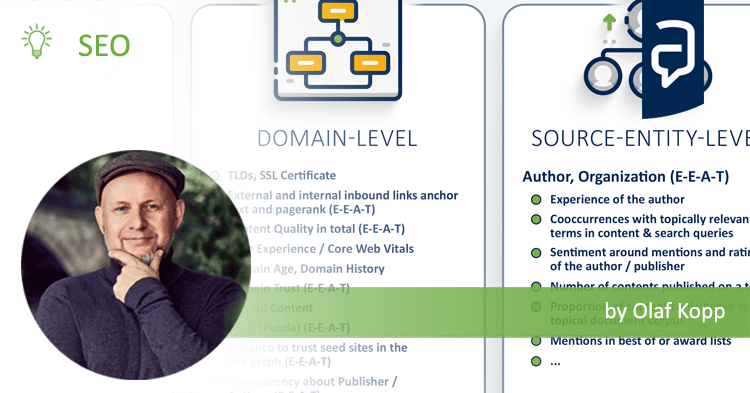

- The dimensions of the Google ranking - 25. April 2024

- Interesting Google patents for search and SEO in 2024 - 3. April 2024

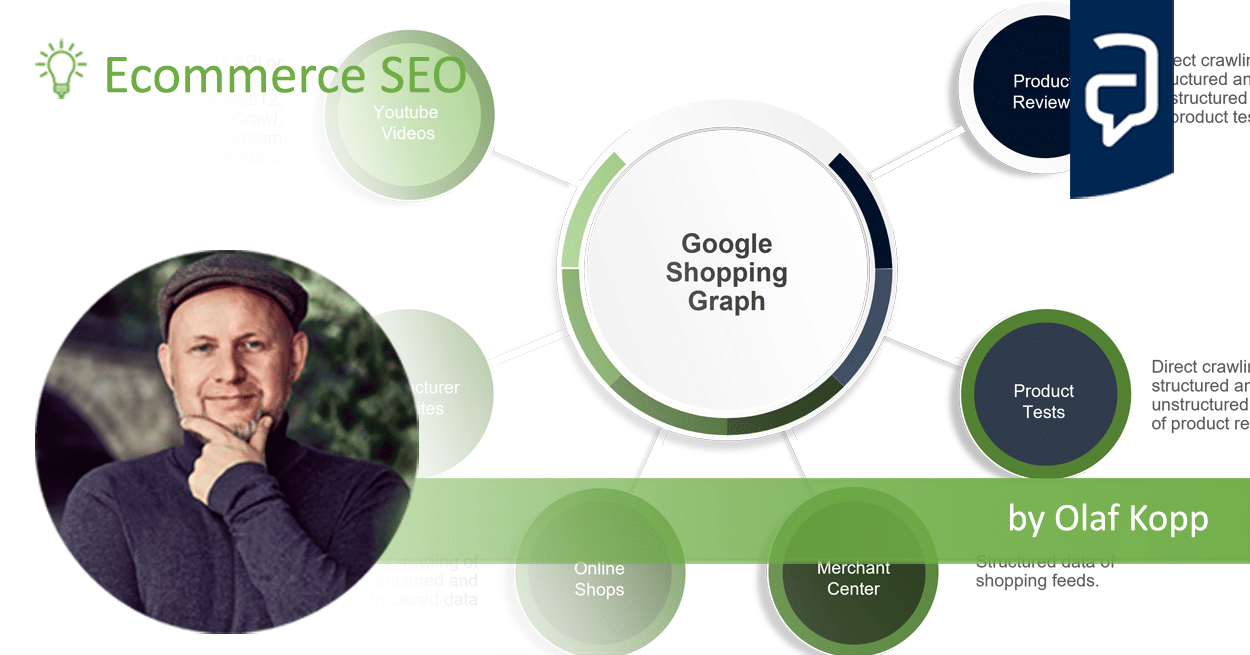

- What is the Google Shopping Graph and how does it work? - 27. February 2024

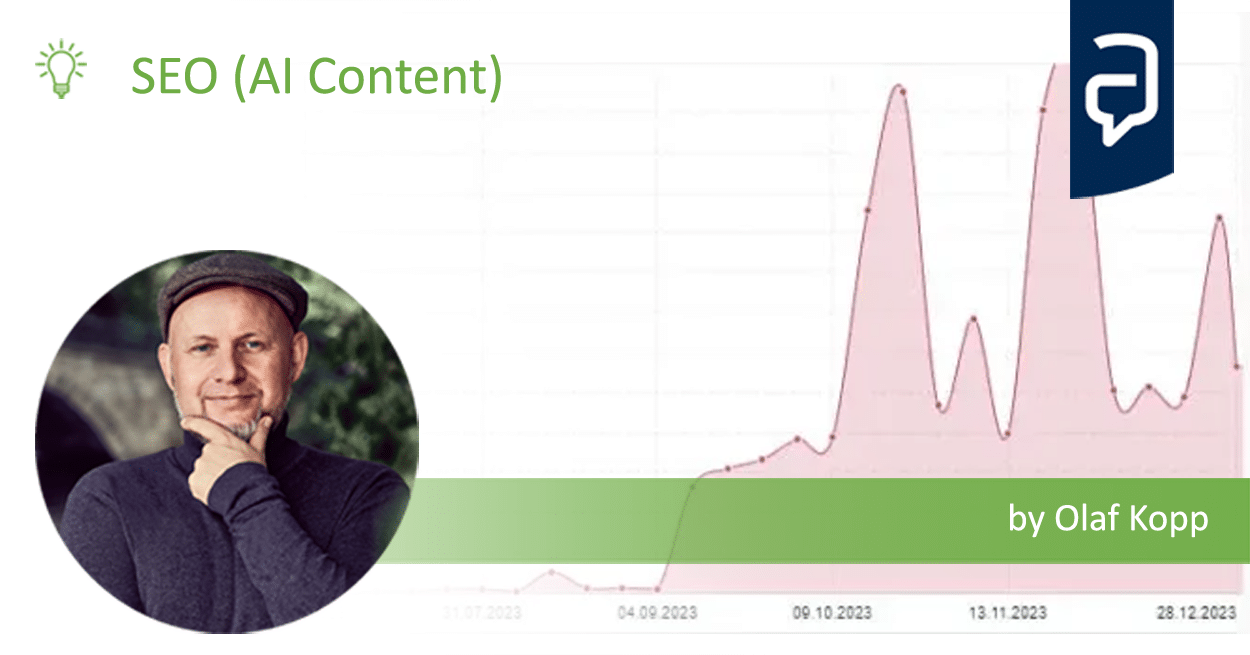

- “Google doesn’t like AI content!” Myth or truth? - 19. February 2024

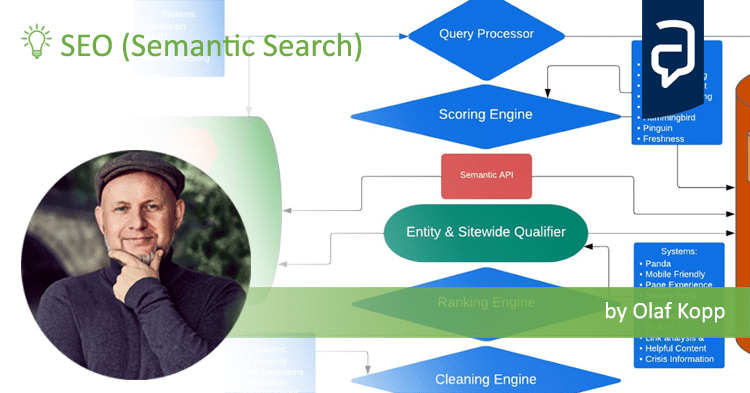

- Most interesting Google Patents for semantic search - 12. February 2024

- How does Google search (ranking) may be working today - 4. February 2024

- Success factors for user centricity in companies - 28. January 2024

- Social media has become one of the most important gatekeepers for content - 28. January 2024

- E-E-A-T: Google ressources, patents and scientific papers - 24. January 2024

- Patents and research papers for deep learning & ranking by Marc Najork - 21. January 2024

Here is a tutorial for a simple implementation via Python to build yourself:

Here is a tutorial for a simple implementation via Python to build yourself: